Introduction – What are Foundation Models in Generative AI?

In the past few years, artificial intelligence (AI) has rapidly evolved from narrow task-specific systems to highly versatile models capable of handling a wide range of applications. At the center of this transformation lies the concept of foundation models—large-scale AI models that serve as the backbone for numerous downstream applications in natural language processing (NLP), computer vision, robotics, and multimodal systems.

A foundation model is a large AI model trained on massive amounts of diverse data, such as text, images, code, or audio, using self-supervised learning techniques. Once pre-trained, the model can be adapted to specialized tasks through fine-tuning, prompt engineering, or reinforcement learning. This flexibility is what makes such models the “foundations” upon which other applications are built.

The term “foundation model” was introduced in 2021 by researchers at Stanford’s Center for Research on Foundation Models (CRFM), part of the Stanford Institute for Human-Centered AI (HAI). Unlike earlier AI approaches, which relied on narrow models built for specific tasks, foundation models are designed to generalize knowledge across different domains. For example, a single foundation model like GPT (Generative Pre-trained Transformer) can power chatbots, summarization tools, code generators, and even creative writing platforms, all without requiring a separate model for each task.

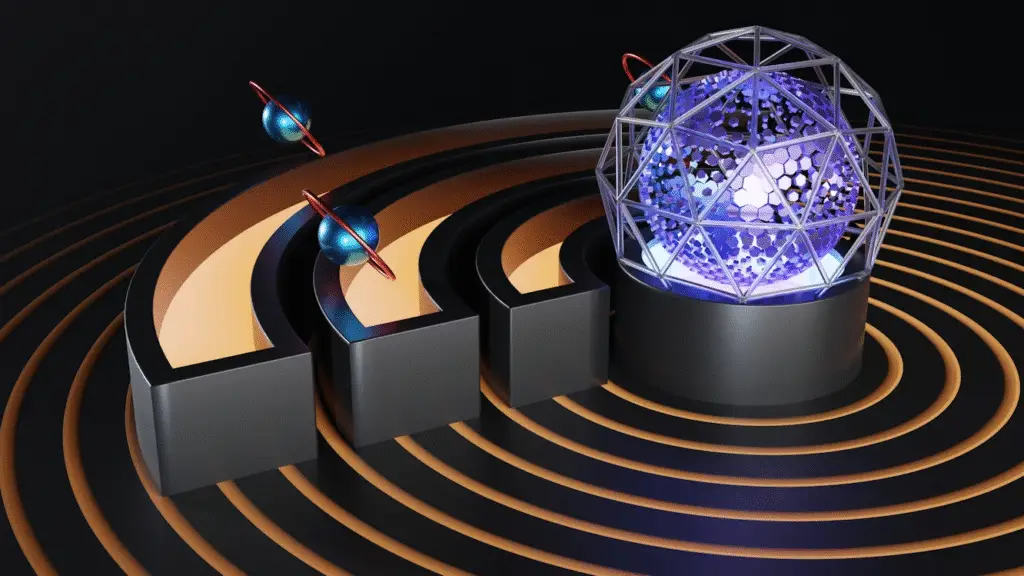

What makes these models revolutionary is their scale and adaptability. With billions or even trillions of parameters, trained on correspondingly vast datasets, they can capture intricate patterns in data that smaller models cannot. More importantly, they are not limited to a single form of input. Some foundation models are multimodal, meaning they can process and generate multiple data types: for instance, generating images from text (like Stable Diffusion) or analyzing video and audio alongside language (like Google’s Gemini).

Generative AI, a subfield powered by foundation models, focuses on creating new data rather than just analyzing existing data. This includes generating realistic images, composing music, writing essays, designing products, or proposing new molecular structures. The synergy between generative AI and foundation models is driving a new wave of innovation across industries.

To illustrate:

- In business, foundation models are automating customer service, generating marketing content, and analyzing financial trends.

- In science, they accelerate drug discovery by predicting protein structures.

- In creativity, they enable artists, musicians, and writers to co-create with AI tools.

However, foundation models are not without challenges. Their development requires enormous computational resources, raising questions about energy consumption, access inequality, and ethical risks. Additionally, because they are trained on large internet datasets, they can reproduce biases or generate inaccurate content.

Despite these concerns, foundation models are widely seen as a paradigm shift in AI development, offering a scalable, flexible, and adaptable approach that could shape the future of digital innovation.

As we progress through this article, we will explore how foundation models evolved, what makes them unique, their real-world applications, challenges, and the road ahead for this rapidly advancing field.

Evolution of Generative AI – From Narrow AI to Foundation Models

Artificial Intelligence has gone through several paradigm shifts over the past few decades. Each stage has pushed AI closer to human-like intelligence, with foundation models representing the most advanced leap so far. To understand their importance, it’s essential to trace how AI evolved—from rule-based systems to machine learning, then to deep learning, and finally to foundation models that power today’s generative AI.

1. The Era of Narrow AI (1950s–1990s)

Early AI systems were rule-based and designed to solve very specific problems. These systems relied heavily on human-created instructions. For instance:

- Expert systems in the 1980s could diagnose diseases or help troubleshoot machines by following predefined logic.

- Chess-playing programs used hardcoded strategies to simulate intelligence.

While effective for structured tasks, these models lacked flexibility. They couldn’t adapt beyond their programmed knowledge, earning the label “narrow AI.”

2. Rise of Machine Learning (1990s–2010s)

With the explosion of digital data and computing power in the 1990s, machine learning (ML) began to replace rule-based programming. Instead of hardcoding solutions, ML allowed models to learn from data patterns.

Examples include:

- Spam filters that adapted to new types of unwanted emails.

- Recommendation engines like those used by Amazon and Netflix.

- Fraud detection systems in banking.

The key advantage was adaptability: ML systems could improve as more data became available. However, they still required domain-specific datasets and feature engineering—meaning humans had to decide what variables the model should learn from.

3. The Deep Learning Revolution (2010s)

The 2010s saw the rise of deep learning, powered by neural networks with many layers. This shift was enabled by advances in GPU computing and access to large datasets.

Deep learning models like convolutional neural networks (CNNs) and recurrent neural networks (RNNs) excelled in tasks such as:

- Image recognition (e.g., identifying objects in photos).

- Speech recognition (e.g., powering Siri and Google Voice).

- Language translation (e.g., Google Translate improvements).

For the first time, AI systems could automatically extract features from raw data without explicit human engineering. Yet, these models were still task-specific. A model trained for translation couldn’t perform sentiment analysis without retraining from scratch.

4. Emergence of Foundation Models (2020s)

The true breakthrough came with transformer architectures, introduced in 2017 by the paper “Attention Is All You Need.” Transformers enabled models to handle sequences of data more efficiently, particularly in natural language.
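To make the idea concrete, here is a minimal sketch of scaled dot-product attention, the core operation of that paper: each position scores every other position, turns the scores into weights with a softmax, and returns a weighted mix of the values. The toy vectors are invented for illustration, and the sketch leaves out the learned projection matrices and multiple heads that real transformers use.

```python
import math

def softmax(scores):
    """Turn raw scores into weights that sum to 1."""
    highest = max(scores)
    exps = [math.exp(s - highest) for s in scores]  # subtract max for stability
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention over lists of plain Python vectors."""
    d_k = len(keys[0])
    outputs = []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k)
                  for k in keys]
        weights = softmax(scores)
        # Weighted average of the value vectors.
        mixed = [sum(w * v[j] for w, v in zip(weights, values))
                 for j in range(len(values[0]))]
        outputs.append(mixed)
    return outputs

# Toy "token" vectors (made up); self-attention uses them as Q, K, and V.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
result = attention(tokens, tokens, tokens)
```

Because every position attends to every other position in one step, the computation parallelizes well, which is part of why this design scaled to very large models.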

This innovation laid the groundwork for large-scale pretraining on massive datasets. Instead of building separate models for each task, researchers trained a single model on a diverse range of internet-scale data. The resulting models—such as GPT-3, BERT, LLaMA, and Stable Diffusion—demonstrated remarkable generalization.

Key differences from earlier models:

- Scale: Foundation models have billions or trillions of parameters, far larger than traditional deep learning models.

- Adaptability: A single pre-trained model can be fine-tuned for multiple tasks.

- Generativity: They don’t just analyze data—they generate new text, images, code, or audio.

5. Foundation Models & Generative AI

Generative AI thrives on foundation models because they are capable of synthesizing new content based on learned patterns. For example:

- GPT models can write essays, generate poetry, or write code.

- Stable Diffusion can create unique images from text prompts.

- DALL·E can blend visual concepts in ways never seen before.

This adaptability marks a departure from narrow AI. Instead of building thousands of specialized models, developers can leverage a single foundation model to power countless applications.

6. Why This Evolution Matters

The move from narrow AI to foundation models represents a shift from scarcity to abundance in AI capabilities:

- Efficiency: Developers don’t need to start from scratch for each task.

- Accessibility: Non-experts can harness AI power through simple prompts.

- Creativity: AI is no longer just an analyzer—it’s a co-creator.

As a result, foundation models are not just another step in AI evolution—they are a paradigm shift that unlocks possibilities across industries, from healthcare and education to entertainment and finance.

Key Characteristics of Foundation Models

Foundation models stand out from earlier AI systems not only because of their scale but also because of their versatility, adaptability, and generative power. To fully appreciate their role in shaping the future of generative AI, let’s explore the defining characteristics that make them unique.

1. Large-Scale Training on Massive Datasets

One of the hallmarks of foundation models is their training on internet-scale datasets that span text, images, code, audio, and video. Unlike traditional models that rely on narrow, domain-specific data, foundation models learn from diverse sources—ranging from Wikipedia and research papers to social media and multimedia archives.

- Why it matters: This breadth of data enables the models to capture not only facts but also context, tone, and style.

- Example: GPT-4 can switch seamlessly from writing a scientific summary to drafting a marketing campaign because it has been trained on both technical and creative texts.

2. Billion-Parameter Scale

Earlier machine learning models had millions of parameters, while foundation models often have billions or even trillions. Parameters are the internal weights that the model adjusts during training to capture patterns in data.

- Benefit: A larger parameter space allows models to represent more complex relationships and subtleties.

- Analogy: Think of parameters like memory. The more memory a system has, the more information it can store and recall.
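As a rough illustration of what counting parameters means, the sketch below totals the weights and biases of a simple fully connected network and estimates how much storage a given number of parameters needs. The layer sizes and the 7-billion-parameter figure are hypothetical examples, not the specification of any particular model.

```python
def count_dense_parameters(layer_sizes):
    """Count weights and biases in a fully connected network.

    Each layer with n_in inputs and n_out outputs has
    n_in * n_out weights plus n_out biases.
    """
    total = 0
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        total += n_in * n_out + n_out
    return total

def weights_size_gb(num_parameters, bytes_per_parameter=2):
    """Approximate storage for the weights, assuming 16-bit values by default."""
    return num_parameters * bytes_per_parameter / 1e9

small_network = count_dense_parameters([784, 128, 10])      # 101770
wide_block = count_dense_parameters([4096, 16384, 4096])    # 134238208
storage_gb = weights_size_gb(7_000_000_000)                 # 14.0
```

Even one wide block reaches over a hundred million parameters, which gives a sense of how stacking many such blocks leads to billion-parameter models.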

3. Transfer Learning and Adaptability

Traditional AI models often had to be retrained from scratch for every new task. Foundation models, by contrast, use transfer learning: a pre-trained model can be fine-tuned or adapted to new tasks with minimal additional data.

- Example: A language model trained on general text can be fine-tuned for legal document review or medical diagnosis.

- Impact: This makes AI development faster, cheaper, and more accessible for industries with limited datasets.
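The pattern behind transfer learning can be sketched in a few lines: keep the pre-trained part frozen and train only a small task-specific "head" on a handful of labeled examples. In the toy below, `pretrained_features` is a hypothetical stand-in for a frozen pre-trained encoder (a real one would be a neural network, not a word counter), and the four training sentences are invented.

```python
import math

LEGAL_TERMS = {"contract", "clause", "liability", "plaintiff"}
MEDICAL_TERMS = {"patient", "dosage", "diagnosis", "symptom"}

def pretrained_features(text):
    """Hypothetical frozen encoder: never updated during fine-tuning."""
    words = text.lower().split()
    return [
        float(sum(w in LEGAL_TERMS for w in words)),
        float(sum(w in MEDICAL_TERMS for w in words)),
        1.0,  # constant bias feature
    ]

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict(head_weights, text):
    """Probability that the text is a legal document."""
    features = pretrained_features(text)
    return sigmoid(sum(w * x for w, x in zip(head_weights, features)))

def fine_tune_head(examples, epochs=200, learning_rate=0.5):
    """Train only the small head with gradient descent on logistic loss."""
    head_weights = [0.0, 0.0, 0.0]
    for _ in range(epochs):
        for text, label in examples:
            features = pretrained_features(text)
            error = predict(head_weights, text) - label
            head_weights = [w - learning_rate * error * x
                            for w, x in zip(head_weights, features)]
    return head_weights

# Four labeled examples: 1 = legal, 0 = medical.
training_examples = [
    ("the contract includes a liability clause", 1),
    ("the plaintiff signed the contract", 1),
    ("the patient reported a new symptom", 0),
    ("adjust the dosage after diagnosis", 0),
]
head = fine_tune_head(training_examples)
legal_score = predict(head, "review the clause in this contract")
medical_score = predict(head, "the patient needs a lower dosage")
```

Only three numbers are learned here, which is why so few examples suffice; the heavy lifting is assumed to have happened during pre-training.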

4. Multimodality – Beyond Text

Many foundation models are multimodal, meaning they can process and generate multiple types of data, such as text, images, audio, and video.

- Examples:

  - CLIP (by OpenAI) connects text and images, enabling applications like image search by description.

  - Stable Diffusion generates detailed images from text prompts.

  - Whisper processes speech and transcribes audio.

- Why it matters: Multimodality allows for seamless integration of AI into creative industries, healthcare imaging, and even robotics.
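Image search by description, as mentioned for CLIP above, generally works by mapping text and images into a shared vector space and ranking images by how close they sit to the query. The sketch below shows only that ranking step; the file names and embedding values are made up, and a real system would obtain the vectors from trained text and image encoders.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def search_images(query_embedding, image_embeddings):
    """Return image names ordered from most to least similar to the query."""
    return sorted(
        image_embeddings,
        key=lambda name: cosine_similarity(query_embedding, image_embeddings[name]),
        reverse=True,
    )

# Hypothetical embeddings an image encoder might produce.
image_embeddings = {
    "dog_on_beach.jpg": [0.9, 0.1, 0.3],
    "city_at_night.jpg": [0.1, 0.9, 0.2],
    "bowl_of_fruit.jpg": [0.2, 0.2, 0.9],
}
# Hypothetical text embedding for "a dog playing by the sea".
query_embedding = [0.8, 0.2, 0.2]

ranking = search_images(query_embedding, image_embeddings)
```

With these invented numbers the beach photo ranks first because its vector points in nearly the same direction as the query.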

5. Generative Capabilities

Perhaps the most defining feature is the ability to create new content rather than just analyze existing data.

- Applications:

  - Writing human-like essays or stories.

  - Generating new images, music, or videos.

  - Creating functional software code.

- Importance: This generativity opens doors to innovation in art, design, marketing, and product development, making AI not just a problem solver but also a creative partner.

6. Emergent Abilities

Foundation models often demonstrate emergent abilities—skills that were not explicitly programmed or expected. These arise because of their scale and the diversity of training data.

- Examples:

  - Performing basic math even without being designed as a calculator.

  - Understanding idioms, metaphors, or cultural nuances.

  - Translating between language pairs that were not explicitly paired in the training data.

- Implication: This unpredictability can be both powerful and challenging: models sometimes perform surprisingly well on tasks outside their training scope, but it is equally hard to predict where they will fail.

7. Few-Shot and Zero-Shot Learning

Unlike earlier AI models that needed thousands of labeled examples, foundation models can perform few-shot or even zero-shot learning:

- Few-shot: The model picks up a task from just a handful of examples, typically supplied directly in the prompt rather than through retraining.

- Zero-shot: The model performs a task it was never explicitly trained on, based solely on instructions in natural language.

- Example: Asking GPT to “summarize this research article in plain English” works without requiring task-specific retraining.
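In practice, the difference between the two often comes down to how the prompt is assembled. The sketch below builds both kinds of prompt as plain strings; the `Input:`/`Output:` layout and the sample texts are illustrative assumptions rather than a format required by any specific model.

```python
def zero_shot_prompt(instruction, text):
    """Instruction only: the model gets no worked examples."""
    return f"{instruction}\n\nInput: {text}\nOutput:"

def few_shot_prompt(instruction, examples, text):
    """Instruction plus a handful of worked examples before the real input."""
    lines = [instruction, ""]
    for example_input, example_output in examples:
        lines.append(f"Input: {example_input}")
        lines.append(f"Output: {example_output}")
        lines.append("")
    lines.append(f"Input: {text}")
    lines.append("Output:")
    return "\n".join(lines)

article_text = "Transformers process sequences using attention."
zero_shot = zero_shot_prompt(
    "Summarize this research article in plain English.", article_text
)

sentiment_examples = [
    ("The movie was fantastic", "positive"),
    ("I want a refund", "negative"),
]
few_shot = few_shot_prompt(
    "Classify the sentiment of each input.",
    sentiment_examples,
    "The service was slow",
)
```

Either string would then be sent to a model; no weights change in either case, which is what separates prompting from fine-tuning.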

8. Continuous Improvement Through Fine-Tuning

Another key characteristic is that foundation models are living systems—they can be continually updated with new knowledge through fine-tuning, reinforcement learning, or domain-specific adaptation.

- Impact: This allows businesses to build custom solutions without reinventing the wheel.

- Example: Companies fine-tune GPT models for internal customer support chatbots that reflect their brand voice.

9. General-Purpose Intelligence

Foundation models blur the line between specialized AI and general-purpose intelligence. While they are not “true AGI” (Artificial General Intelligence), their ability to perform a wide variety of tasks gives them a broadly general-purpose character.

- Significance: This versatility makes them ideal as the backbone of generative AI, capable of powering countless applications across domains.

Why These Characteristics Matter

Together, these features make foundation models the driving force behind generative AI’s explosion in 2025 and beyond. They allow a single AI system to:

- Write, design, analyze, and converse.

- Scale across industries from medicine to entertainment.

- Learn and adapt faster than any previous generation of AI.

Applications of Foundation Models in Generative AI

Foundation models are not just technological marvels—they are reshaping entire industries by enabling real-world applications of generative AI. Their ability to analyze, adapt, and create makes them valuable across domains ranging from business and healthcare to entertainment and education. Below, we’ll explore some of the most impactful use cases.

1. Content Creation and Media

Perhaps the most visible application of foundation models is in creative industries. Generative AI is now a partner in producing text, images, video, and audio.

- Text Generation: Tools like GPT can draft articles, blogs, and marketing copy in seconds.

- Image Creation: Models like DALL·E and Stable Diffusion create high-quality illustrations, concept art, or product designs.

- Video & Animation: AI systems generate storyboards or even short films.

- Music and Sound: Generative models can compose music or design sound effects tailored to specific moods.

💡 Impact: Businesses save time and money, while creators gain powerful tools to amplify their imagination.

2. Healthcare and Life Sciences

Foundation models are revolutionizing medical research, diagnostics, and personalized treatment.

- Medical Imaging: Computer vision models assist radiologists in detecting diseases in X-rays, MRIs, and CT scans.

- Drug Discovery: Generative models suggest new molecular structures, drastically reducing the time to identify potential drug candidates.

- Virtual Assistants: AI chatbots guide patients, answer medical queries, and provide preliminary triage.

- Genomics: AI models analyze genetic data to predict disease risks or design precision therapies.

💡 Impact: Healthcare becomes more precise, faster, and accessible, improving patient outcomes worldwide.

3. Business and Customer Engagement

Foundation models play a crucial role in transforming business operations and customer experiences.

- Chatbots and Virtual Agents: AI assistants handle customer inquiries 24/7 with human-like responsiveness.

- Personalized Marketing: AI generates targeted recommendations, product descriptions, and ad campaigns.

- Knowledge Management: Models summarize company reports, extract key insights, and support decision-making.

- Process Automation: AI drafts legal contracts, writes financial summaries, or analyzes sales data.

💡 Impact: Businesses streamline workflows, reduce costs, and deliver more tailored services.

4. Education and Training

Foundation models are reshaping how people learn and acquire skills.

- AI Tutors: Personalized tutors adapt lessons based on a learner’s strengths and weaknesses.

- Content Generation: AI creates quizzes, practice problems, and study guides.

- Language Learning: NLP models translate texts, correct grammar, and help students practice conversation.

- Simulation Training: In fields like aviation or medicine, AI-powered virtual simulations provide realistic practice environments.

💡 Impact: Education becomes more inclusive, adaptive, and scalable.

5. Science and Research

AI is a game-changer in accelerating scientific discovery.

- Data Analysis: Foundation models process massive datasets in physics, chemistry, and astronomy.

- Hypothesis Generation: AI proposes new theories or relationships that scientists can test.

- Research Summarization: Models scan thousands of papers to generate literature reviews.

- Climate Modeling: AI enhances predictions of environmental changes and helps in sustainability planning.

💡 Impact: Scientists move from data gathering to insight generation more efficiently.

6. Software Development and Engineering

AI is becoming a co-pilot for developers.

- Code Generation: Models like Codex can write code in multiple programming languages.

- Debugging and Testing: AI suggests fixes and creates automated test cases.

- Documentation: Foundation models generate readable explanations of codebases.

- Low-Code/No-Code Development: Non-programmers can build apps using natural language instructions.

💡 Impact: Software creation becomes faster, more accessible, and less error-prone.

7. Gaming and Entertainment

Generative AI is expanding creative possibilities in interactive entertainment.

- Procedural Content Generation: AI builds game levels, quests, and narratives on the fly.

- Character Development: NPCs (non-player characters) now engage in lifelike conversations.

- Storytelling: Games adapt dynamically to a player’s choices using AI-driven narratives.

- Film & Animation: Studios experiment with AI to design scripts, special effects, and CGI characters.

💡 Impact: Entertainment becomes more immersive and personalized.

8. Finance and Banking

Foundation models assist with financial decision-making and risk management.

- Fraud Detection: AI analyzes transaction patterns to flag unusual activity.

- Market Forecasting: Models process financial data and news to predict market movements.

- Customer Service: Virtual assistants handle loan inquiries, investment advice, and account troubleshooting.

- Document Processing: AI streamlines compliance by analyzing contracts and legal texts.

💡 Impact: Financial institutions enhance security, efficiency, and customer trust.

9. Security and Defense

In national security, cybersecurity, and defense, foundation models offer critical applications.

- Threat Detection: AI analyzes data streams for suspicious activities or potential cyberattacks.

- Autonomous Systems: Used in drones and robotics for surveillance and operations.

- Disinformation Analysis: NLP models track and counter fake news or malicious online campaigns.

- Strategic Planning: AI aids military simulations and predictive modeling.

💡 Impact: AI strengthens security infrastructure while raising ethical and governance challenges.

10. Everyday Tools and Productivity

Finally, foundation models enhance the productivity tools we use every day.

- Smart Assistants: AI integrates into calendars, email, and project management.

- Writing Tools: Grammarly-like applications powered by NLP improve communication.

- Search Engines: Generative search delivers direct, context-aware answers instead of static links.

- Accessibility: Speech-to-text and text-to-speech tools assist users with disabilities.

💡 Impact: AI becomes a seamless part of everyday life, boosting efficiency and inclusivity.

Why These Applications Matter

Foundation models are not confined to a single domain—they are general-purpose enablers. Whether in business, science, or daily life, their applications show how generative AI is not just a trend but a transformative force.

Challenges and Limitations of Foundation Models

While foundation models in generative AI hold enormous potential, they also face serious challenges and limitations that affect their effectiveness, fairness, and long-term adoption. Understanding these concerns is critical for businesses, researchers, and policymakers who want to harness their power responsibly.

1. Computational Costs and Energy Consumption

Training foundation models requires immense computational resources. For example, large-scale models often need thousands of GPUs running for weeks or even months.

- High Cost: Training a state-of-the-art model can cost millions of dollars in computing power.

- Environmental Impact: The energy consumption leads to significant carbon footprints.

- Barrier to Entry: Smaller companies and research labs struggle to compete due to limited resources.

💡 Implication: Without efficiency improvements, foundation models risk becoming tools accessible only to large tech corporations.
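A back-of-the-envelope estimate shows where the "weeks or even months" figure comes from. The sketch below uses a commonly cited rule of thumb that training a dense transformer takes roughly 6 floating-point operations per parameter per training token. The model size, token count, GPU count, per-GPU throughput, and utilization are all hypothetical inputs chosen for illustration.

```python
def training_flops(num_parameters, num_tokens):
    """Rule-of-thumb estimate: about 6 FLOPs per parameter per token."""
    return 6 * num_parameters * num_tokens

def training_days(total_flops, num_gpus, peak_flops_per_gpu, utilization):
    """Wall-clock days, given how much of peak throughput is actually achieved."""
    effective_flops_per_second = num_gpus * peak_flops_per_gpu * utilization
    seconds = total_flops / effective_flops_per_second
    return seconds / 86_400  # seconds per day

# Hypothetical run: 70 billion parameters, 1.4 trillion training tokens.
flops = training_flops(70_000_000_000, 1_400_000_000_000)

# Hypothetical cluster: 1,024 GPUs, 3e14 peak FLOP/s each, 40% utilization.
days = training_days(flops, num_gpus=1024, peak_flops_per_gpu=3e14,
                     utilization=0.4)
```

Under these assumptions the run takes roughly 55 days on more than a thousand GPUs, before counting failed runs, experiments, or evaluation.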

2. Bias in Training Data

Foundation models learn from vast datasets collected from the internet, which naturally contain human biases and stereotypes.

- Gender and Racial Bias: Models may generate discriminatory or offensive content.

- Cultural Limitations: They may misrepresent or underrepresent minority communities.

- Misinformation Spread: Training data may include inaccurate or misleading content, which the model can reproduce.

💡 Implication: If unchecked, biased outputs could reinforce inequality and erode trust in AI systems.

3. Hallucinations and Accuracy Issues

Generative AI models sometimes “hallucinate” information—producing outputs that sound confident but are factually incorrect.

- Incorrect Answers: AI can fabricate references, data, or events.

- Overconfidence: The natural fluency of generated text makes errors harder to detect.

- Risk in Sensitive Domains: In healthcare, law, or finance, hallucinations can lead to dangerous consequences.

💡 Implication: Users must fact-check AI outputs before relying on them for critical decisions.

4. Lack of Transparency and Explainability

Foundation models are often described as “black boxes” because their decision-making processes are difficult to interpret.

- Complexity: Billions of parameters make it hard to understand how predictions are made.

- Accountability Issues: Without explainability, it’s difficult to assign responsibility for errors.

- Regulatory Challenges: Regulators increasingly expect explainable AI in high-risk sectors like healthcare and defense.

💡 Implication: Greater transparency and interpretability are essential for safe and ethical deployment.

5. Security and Misuse Risks

Powerful models can be weaponized for malicious purposes.

- Deepfakes: AI can generate realistic but fake images, videos, or voices.

- Cybersecurity Threats: Models may be exploited to create phishing emails or malicious code.

- Propaganda: Generative AI can mass-produce disinformation at scale.

- Model Theft: Competitors or bad actors may steal models through cyberattacks.

💡 Implication: Security protocols and monitoring systems are crucial to prevent misuse.

6. Intellectual Property and Copyright Concerns

Generative AI blurs the line between original and derivative work.

- Content Ownership: Who owns AI-generated music, art, or code?

- Training Data Issues: Models often train on copyrighted works without explicit permission.

- Legal Battles: Artists, authors, and companies are increasingly suing AI firms over copyright violations.

💡 Implication: Clear legal frameworks are needed to balance innovation with creator rights.

7. Limited Generalization in Real-World Scenarios

Despite their vast training data, foundation models may fail in specific, real-world tasks.

- Contextual Misunderstanding: AI may misinterpret subtle cultural or domain-specific nuances.

- Out-of-Distribution Data: Models struggle when facing situations not seen during training.

- Overfitting and Generic Outputs: They may reproduce patterns memorized from training data, or fall back on generic, repetitive outputs, instead of producing contextually relevant ones.

💡 Implication: Models must be fine-tuned and rigorously tested for specialized applications.

8. Accessibility and Inequality

The benefits of foundation models are not distributed evenly.

- Language Barriers: Many models are biased toward English and underperform in low-resource languages.

- Digital Divide: Wealthier nations and corporations adopt AI faster than developing economies.

- Exclusivity: Proprietary models limit access for researchers and smaller businesses.

💡 Implication: Democratizing access to AI is key to ensuring global benefits.

9. Ethical and Societal Concerns

Foundation models raise deep ethical questions about human-AI interaction.

- Job Displacement: Automation may threaten roles in content creation, customer service, and programming.

- Authenticity: Human creativity and originality may be overshadowed by mass-produced AI content.

- Dependency: Over-reliance on AI could weaken critical thinking and problem-solving skills.

💡 Implication: Society must strike a balance between leveraging AI’s benefits and protecting human values.

10. Regulatory and Governance Challenges

Governments worldwide are still developing policies to regulate generative AI.

- Lack of Standards: No universal framework exists for auditing AI safety.

- Cross-Border Issues: Global use of AI creates jurisdictional conflicts.

- Rapid Innovation: Regulation often lags behind technological progress.

💡 Implication: Strong governance, international collaboration, and AI ethics boards are needed.

Why These Limitations Matter

Foundation models are not flawless. Recognizing their limitations ensures that they are deployed responsibly and effectively. By addressing issues like bias, security risks, and environmental costs, society can maximize the advantages of generative AI while minimizing harm.

The Future of Foundation Models in Generative AI

The story of foundation models is still unfolding. As we move deeper into the age of generative AI, the next decade will be shaped by innovations that make these systems more efficient, ethical, accessible, and human-centric. The future promises both exciting opportunities and pressing challenges.

1. Efficiency and Sustainability

One of the biggest priorities for the future of foundation models is reducing computational costs and energy consumption.

- Smaller, Smarter Models: Researchers are focusing on parameter-efficient architectures that deliver comparable performance at a fraction of the size.

- Green AI Initiatives: More eco-friendly training methods, such as energy-efficient hardware and renewable-powered data centers, are gaining traction.

- Model Distillation: Techniques like knowledge distillation will allow large models to transfer capabilities to smaller, faster ones.

💡 Outlook: The future of AI is not just about building “bigger” models but creating leaner, sustainable systems that everyone can use.
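Knowledge distillation, mentioned above, typically trains a small "student" model to match the softened output probabilities of a large "teacher". The sketch below computes one common form of that training signal, the KL divergence between temperature-softened distributions. The logits are made up, and some formulations also scale the loss by the squared temperature, which is omitted here.

```python
import math

def softmax(logits, temperature=1.0):
    """Softmax with temperature; higher temperature gives a softer distribution."""
    scaled = [z / temperature for z in logits]
    highest = max(scaled)
    exps = [math.exp(z - highest) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL divergence from the student's to the teacher's softened distribution."""
    teacher_probs = softmax(teacher_logits, temperature)
    student_probs = softmax(student_logits, temperature)
    return sum(p * math.log(p / q)
               for p, q in zip(teacher_probs, student_probs))

# Hypothetical logits over three classes.
teacher = [4.0, 1.0, 0.5]
student_close = [3.5, 1.2, 0.4]   # mostly agrees with the teacher
student_far = [0.5, 1.0, 4.0]     # disagrees with the teacher

loss_close = distillation_loss(teacher, student_close)
loss_far = distillation_loss(teacher, student_far)
```

Minimizing this loss pushes the student toward the teacher's full probability distribution, not just its top answer, which is how the smaller model inherits some of the larger one's behavior.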

2. Democratization of AI Access

Currently, the most powerful foundation models are controlled by a handful of big tech companies. This may change.

- Open-Source Models: Projects like LLaMA and BLOOM are making advanced AI accessible to researchers and startups.

- Decentralized AI: Blockchain-powered AI ecosystems could reduce reliance on centralized tech giants.

- Global Inclusion: Efforts are underway to build models for low-resource languages and culturally diverse datasets.

💡 Outlook: Wider access will accelerate innovation and empower smaller players worldwide to harness AI.

3. Multimodal and Cross-Domain Capabilities

The future of foundation models lies in multimodal AI, which can seamlessly process and generate text, images, audio, video, and code.

- Unified Models: Instead of using separate tools for images, text, and speech, future systems will integrate multiple modalities.

- Creative AI Assistants: Generative AI will evolve into all-in-one creative partners, capable of producing a movie script, generating visuals, composing background music, and coding an interactive experience.

- Cross-Domain Intelligence: Advanced models will bridge fields like science, law, and healthcare, offering specialized insights across disciplines.

💡 Outlook: Multimodality will make AI more intuitive, human-like, and versatile.

4. Human-AI Collaboration

The narrative is shifting from “AI replacing humans” to “AI augmenting humans.”

- Co-Creation Tools: Writers, artists, programmers, and marketers will use AI as collaborators, not competitors.

- Decision Support: Professionals in medicine, law, and engineering will rely on AI for data-driven recommendations while retaining final authority.

- Personalized Assistants: Future AI will adapt to individual preferences, learning styles, and work habits.

💡 Outlook: The strongest future AI models will amplify human potential rather than diminish it.

5. Regulation and Ethical Guardrails

Governments and organizations are actively working on AI governance frameworks to ensure safety and fairness.

- AI Auditing: Mandatory evaluations will check for bias, accuracy, and explainability.

- Usage Boundaries: Policies will define where and how AI can be used (e.g., banning deepfakes in elections).

- Ethical AI Principles: Fairness, transparency, and accountability will become baseline expectations.

💡 Outlook: By 2030, global AI regulations may exist, similar to international laws on climate or trade.

6. Customization and Personalization

Foundation models will evolve from one-size-fits-all tools into highly personalized systems.

- Domain-Specific Models: Specialized models for healthcare, law, or finance will outperform general-purpose ones in accuracy.

- Personal AI Agents: Individuals may have their own AI twin, trained on personal data to act as a digital extension of themselves.

- Adaptive Learning: AI will continuously learn from user interactions while respecting privacy controls.

💡 Outlook: AI will transition from being a “universal assistant” to a deeply personal companion.

7. Enhanced Explainability and Trust

For foundation models to achieve mass adoption, they must become more transparent.

- Explainable AI (XAI): Future models will include built-in features that explain why they make specific predictions.

- Confidence Scores: AI will indicate the reliability of its answers, helping users fact-check more effectively.

- Open Datasets: Greater transparency about training data sources will foster trust.

💡 Outlook: Trustworthy AI will be the norm, not the exception.

8. Integration with Emerging Technologies

Foundation models won’t exist in isolation—they will integrate with other cutting-edge fields.

- AI + Robotics: Smarter robots powered by generative AI will enhance manufacturing, healthcare, and home automation.

- AI + IoT: Connected devices will use AI to predict, optimize, and automate household and industrial functions.

- AI + Quantum Computing: Quantum breakthroughs could eventually speed up parts of model training, although practical benefits for foundation models remain speculative.

💡 Outlook: The synergy between AI and other technologies will reshape industries and daily life.

9. Addressing Societal Impact

Foundation models will force society to confront bigger-picture challenges.

- Workforce Transformation: Upskilling programs will be essential to prepare workers for AI-augmented jobs.

- Education Revolution: Classrooms may use AI tutors to provide personalized learning at scale.

- Cultural Shifts: AI-generated content will influence art, media, and entertainment, raising questions about authenticity and creativity.

💡 Outlook: The societal impact of AI will depend not only on technology but on how humans choose to use it.

10. The Path Ahead

Looking forward, the evolution of foundation models will follow three guiding principles:

- Smarter – AI will become more efficient, accurate, and adaptable.

- Safer – Ethical frameworks will guide usage, reducing harm and bias.

- Shared – Access will expand, ensuring that benefits reach people globally.

Key Takeaways and FAQs

Foundation models are at the heart of the AI revolution. From powering chatbots and image generators to transforming industries like healthcare, finance, and education, these large-scale models are reshaping how humans interact with technology. But their impact extends far beyond innovation—they raise questions about ethics, governance, sustainability, and inclusivity.

This section highlights the most important takeaways and addresses frequently asked questions to provide clarity on the role of foundation models in our future.

Key Takeaways

- Foundation models are general-purpose AI systems trained on massive datasets, capable of performing multiple downstream tasks with minimal fine-tuning.

- Generative AI is fueled by foundation models, enabling text, image, audio, and video creation across diverse applications.

- Top players like OpenAI, Google DeepMind, Anthropic, and Meta are leading development, but open-source projects are democratizing access.

- Ethical challenges such as bias, misinformation, and energy consumption remain central concerns.

- Future models will be multimodal, personalized, explainable, and sustainable, moving from “bigger” to “smarter” AI.

- Collaboration between humans and AI will define success, emphasizing augmentation over replacement.

- Global regulations and governance are inevitable to ensure responsible deployment.

FAQs on Foundation Models in Generative AI

1. What is a foundation model?

A foundation model is a large-scale AI system trained on massive datasets, designed to serve as a base for multiple downstream applications like chatbots, translators, or image generators.

2. How do foundation models differ from traditional AI models?

Traditional AI models are usually task-specific (e.g., detecting spam emails). Foundation models, by contrast, are general-purpose and can be fine-tuned for a wide range of tasks.

3. What are some examples of foundation models?

Examples include GPT-4 and GPT-5 (OpenAI), Gemini (Google DeepMind), Claude (Anthropic), LLaMA (Meta), and open-source initiatives like BLOOM.

4. Why are foundation models important for generative AI?

They provide the pre-trained base that powers generative AI applications such as chatbots, creative assistants, image generators, and multimodal systems.

5. What are the ethical concerns with foundation models?

Major concerns include:

- Bias from training data

- Misinformation and deepfakes

- Data privacy risks

- Environmental impact due to high energy use

6. Will AI foundation models replace human jobs?

They are more likely to augment human work than replace it. Routine tasks may be automated, but new roles in AI oversight, creativity, and ethics will emerge.

7. How do foundation models impact different industries?

- Healthcare: Early disease detection, medical image analysis

- Finance: Fraud detection, personalized financial advice

- Education: AI tutors, personalized learning tools

- Entertainment: Music, art, and content creation at scale

8. What is the future of foundation models?

The future points toward smaller, more efficient, multimodal, and explainable systems. Personal AI assistants, open-source democratization, and tighter global regulation will define the next decade.

9. Who controls the most powerful foundation models today?

Currently, large tech companies—OpenAI, Google, Anthropic, and Meta—dominate the space. However, open-source models are expanding access.

10. How can businesses leverage foundation models?

Businesses can use foundation models to automate workflows, personalize customer experiences, analyze data, and create content, all while reducing operational costs.

Final Word

Foundation models are not just another step in AI advancement—they are the cornerstone of generative AI’s future. By balancing innovation with ethics, efficiency, and inclusivity, they will evolve from powerful prototypes into trusted global tools that transform everyday life.
