Web crawlers collect and structure information from across the internet. OpenAI’s GPTBot is one such crawler. It gathers publicly available web content that OpenAI may use to improve AI models like ChatGPT. Not every site owner welcomes it, and efforts to manage it have sparked debate over data privacy, intellectual property rights, and website security. In this guide, we explain how web crawling works, what GPTBot does, and how website owners can control its access to their sites.

Understanding Web Crawling

The Inner Workings of a Web Crawler

At its core, a web crawler (also called a spider or search engine bot) is an automated program that visits web pages, follows the links it finds, and records what each page contains. Search engines store this data in a structured index so they can retrieve relevant pages quickly. Think of it as a diligent librarian cataloging the internet's vast collection.
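To make the librarian analogy concrete, here is a minimal Python sketch of a crawler's core loop: extract the links on each page, queue any that have not been seen, and visit them breadth-first. To stay self-contained, it crawls a small in-memory dictionary of pages instead of fetching anything over the network. The names `extract_links`, `crawl_order`, and `PAGES` are illustrative and not part of any library. A real crawler would also fetch pages over HTTP, honor robots.txt, and limit its request rate.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urldefrag, urljoin


class LinkExtractor(HTMLParser):
    """Collects absolute http(s) URLs from <a href="..."> tags."""

    def __init__(self, base_url: str) -> None:
        super().__init__()
        self.base_url = base_url
        self.links: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        for name, value in attrs:
            if name == "href" and value:
                # Resolve relative links and drop "#fragment" parts so the
                # same page is not queued twice under different names.
                absolute, _fragment = urldefrag(urljoin(self.base_url, value))
                if absolute.startswith(("http://", "https://")):
                    self.links.append(absolute)


def extract_links(base_url: str, html: str) -> list[str]:
    parser = LinkExtractor(base_url)
    parser.feed(html)
    parser.close()
    return parser.links


def crawl_order(pages: dict[str, str], start: str, max_pages: int = 100) -> list[str]:
    """Breadth-first crawl over an in-memory web mapping URL -> HTML."""
    seen = {start}
    frontier = deque([start])
    order = []
    while frontier and len(order) < max_pages:
        url = frontier.popleft()
        html = pages.get(url)
        if html is None:
            continue  # a real crawler would record a failed fetch here
        order.append(url)
        for link in extract_links(url, html):
            if link not in seen:
                seen.add(link)
                frontier.append(link)
    return order


PAGES = {
    "https://example.com/": '<a href="/a">A</a> <a href="https://example.com/b#top">B</a>',
    "https://example.com/a": '<a href="/b">B</a> <a href="mailto:info@example.com">Mail</a>',
    "https://example.com/b": '<a href="/">Home</a>',
}

if __name__ == "__main__":
    print(crawl_order(PAGES, "https://example.com/"))
```

With the sample pages, the crawler visits the home page, then /a, then /b. The mailto: link is skipped, and the #top fragment is dropped, so /b is queued only once.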

The Role of Web Crawlers

Web crawlers discover and index relevant URLs. Search engines then use that index to rank pages by authority and relevance for a given search query. For instance, if you search for a fix to a Windows error, your search engine draws on pages its crawler has already indexed and ranks results from reputable sources on Windows errors near the top.

OpenAI’s GPTBot Web Crawler

OpenAI’s GPTBot is the web crawler OpenAI uses to collect data for improving AI models such as ChatGPT. It identifies itself with the user-agent token GPTBot. According to OpenAI, pages crawled by GPTBot may be used to improve future models and make them more accurate, more capable, and safer. OpenAI also says the collected data is filtered to remove sources that require paywall access, are known to primarily aggregate personally identifiable information, or contain text that violates OpenAI’s policies.


The Imperative for Website Protection

Navigating Divergent Interests

Users value AI models like ChatGPT for the information they provide, but many website owners see things differently. GPTBot has raised concerns among website creators that their content could be used without attribution and without sending visitors back to their sites. The situation highlights the tension between advancing AI and protecting content creators’ rights.

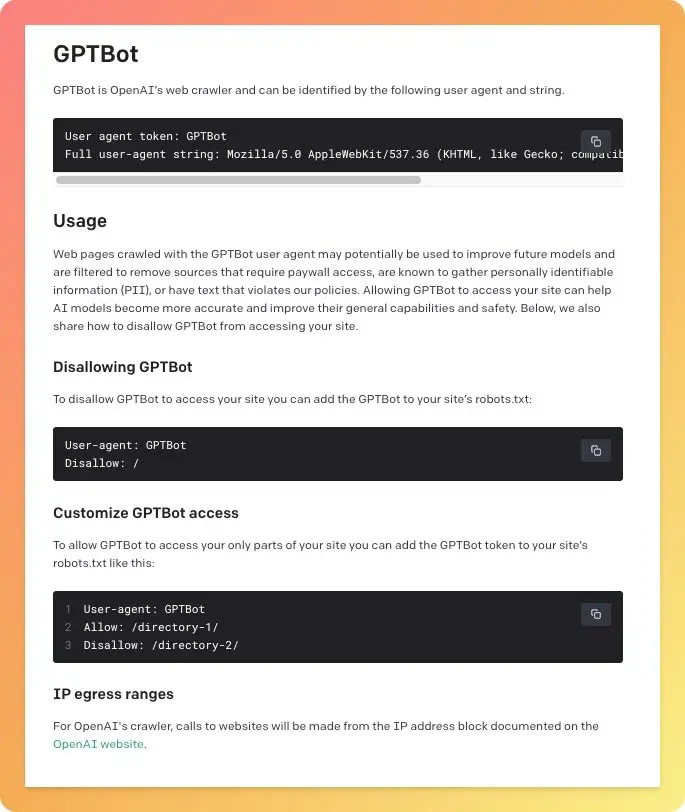

Leveraging the robots.txt File

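The robots.txt file sits at the root of your site and tells crawlers which parts of the site they may visit. It is a voluntary standard rather than an access control, but OpenAI states that GPTBot honors it. With robots.txt, you can do the following: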

Complete Cessation of GPTBot

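By adding a rule to robots.txt, you can ask GPTBot not to crawl any part of your website. Keep in mind that the file is public and that only compliant crawlers follow it, so it limits crawling but does not guarantee privacy.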

Selective Page Blocking

If only certain pages or directories should stay off-limits to GPTBot, list them in robots.txt. This lets you protect some content while keeping the rest of your site open.

Guiding GPTBot’s Path

robots.txt can also act as a roadmap for GPTBot. Its Allow and Disallow rules specify which URL paths the crawler may visit and which it should skip.
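All three uses above come down to groups of rules. Each group starts with a `User-agent` line naming the crawler, followed directly by the `Allow` and `Disallow` paths that apply to it, with a blank line between groups. As an illustration, the hypothetical helper below (not part of any library) renders such groups from a Python dictionary. This can be handy if you generate robots.txt from configuration. The `/admin/` path is a placeholder.

```python
def render_robots_txt(policy: dict[str, dict[str, list[str]]]) -> str:
    """Render {user_agent: {"allow": [...], "disallow": [...]}} as robots.txt.

    Each user agent becomes one group: its User-agent line is followed
    directly by its rules, and groups are separated by one blank line.
    """
    groups = []
    for agent, rules in policy.items():
        lines = [f"User-agent: {agent}"]
        lines += [f"Allow: {path}" for path in rules.get("allow", [])]
        lines += [f"Disallow: {path}" for path in rules.get("disallow", [])]
        groups.append("\n".join(lines))
    return "\n\n".join(groups) + "\n"


if __name__ == "__main__":
    print(render_robots_txt({
        "GPTBot": {"allow": ["/directory-1/"], "disallow": ["/directory-2/"]},
        "*": {"disallow": ["/admin/"]},
    }), end="")
```

In this example, GPTBot follows only its own group. The `*` group applies to crawlers that have no group of their own.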

Safeguarding Your Website Against OpenAI’s Web Crawler

To control GPTBot’s activity on your website, follow these steps:

Complete Cessation

Create a file named robots.txt in your website’s root directory so that it is served at https://example.com/robots.txt (or open the existing file).

Open the file in a text editor.

Add the following lines to block GPTBot from the entire site:

```text
User-agent: GPTBot
Disallow: /
```
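Before deploying the file, you can check how a compliant crawler would read it. The sketch below uses Python's standard `urllib.robotparser` module. The `example.com` URLs, the paths, and the helper name `allowed` are placeholders. The sketch also shows why the two lines should sit directly beneath each other. This parser treats a blank line right after a `User-agent` line as the end of that group, so a copy with a blank line in between blocks nothing. Other parsers may be more lenient, but keeping each group compact avoids depending on that.

```python
from urllib.robotparser import RobotFileParser

# The rules from the step above, with no blank line inside the group.
FULL_BLOCK = "User-agent: GPTBot\nDisallow: /\n"

# The same rules with a blank line between them, as they sometimes appear
# when copied from a web page.
SPACED_OUT = "User-agent: GPTBot\n\nDisallow: /\n"


def allowed(robots_txt: str, agent: str, url: str) -> bool:
    """Return True if a crawler honoring robots_txt may fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(agent, url)


if __name__ == "__main__":
    for agent in ("GPTBot", "Googlebot"):
        for url in ("https://example.com/", "https://example.com/blog/post-1"):
            print(agent, url, allowed(FULL_BLOCK, agent, url))
    # urllib.robotparser ends the group at the blank line, so the
    # Disallow rule is dropped and GPTBot is not blocked at all.
    print("spaced-out file blocks GPTBot:",
          not allowed(SPACED_OUT, "GPTBot", "https://example.com/"))
```

Pass the short token (`GPTBot`) as the agent. `urllib.robotparser` only looks at the text before the first `/` of the string you pass, so a full browser-style user-agent string would not match the rule.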

Selective Page Blocking

Create or open the robots.txt file in your website’s root directory.

Edit it in a text editor.

To allow some directories and block others, use lines like these:

```text
User-agent: GPTBot
Allow: /directory-1/
Disallow: /directory-2/
```
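The same standard-library parser can confirm what the selective rules permit. Any path that matches no rule is allowed by default. As a result, `Allow` mainly matters for carving an exception out of a broader `Disallow`, as in the second rule set below (its `/blog/` paths are placeholders). `urllib.robotparser` applies the first matching rule in file order, while RFC 9309 specifies that the most specific (longest) match wins. Listing the narrower `Allow` first gives the same result under both. The helper name `gptbot_may_fetch` is illustrative.

```python
from urllib.robotparser import RobotFileParser

# The rules from the step above.
SELECTIVE = """\
User-agent: GPTBot
Allow: /directory-1/
Disallow: /directory-2/
"""

# Placeholder rules: block /blog/ except /blog/public/. The narrower Allow
# comes first because urllib.robotparser stops at the first matching rule.
WITH_EXCEPTION = """\
User-agent: GPTBot
Allow: /blog/public/
Disallow: /blog/
"""


def gptbot_may_fetch(robots_txt: str, path: str) -> bool:
    """Return True if GPTBot, honoring robots_txt, may fetch this path."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch("GPTBot", "https://example.com" + path)


if __name__ == "__main__":
    for rules in (SELECTIVE, WITH_EXCEPTION):
        for path in ("/directory-1/page", "/directory-2/page",
                     "/blog/public/post", "/blog/draft", "/about"):
            print(path, gptbot_may_fetch(rules, path))
        print()
```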

Choice Empowerment: Opt-Out and Protection

OpenAI’s Opt-Out Option

OpenAI acknowledges website owners’ concerns and offers an opt-out. GPTBot follows robots.txt rules, so a Disallow rule for the GPTBot user agent keeps your pages out of its crawl. This gives website creators a say in how AI models access and use their content.

Shielding Your Digital Territory

To protect your website from OpenAI’s GPTBot and keep control over your online content, consider the following measures:

Customized robots.txt: Add a “Disallow: /” rule under “User-agent: GPTBot” to ask GPTBot to stay off your entire site.

Tailored Access Control: Write Allow and Disallow rules for GPTBot in robots.txt to specify which pages it may crawl.

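Web Application Firewall (WAF): A WAF, or an equivalent rule on your web server, can reject requests by user agent or IP address. Unlike robots.txt, this blocks requests at the server instead of relying on the crawler to comply. A WAF also protects against a range of other online threats.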
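Each WAF product and web server has its own rule syntax. As a neutral illustration, here is the same idea as a Python WSGI middleware: any request whose `User-Agent` header contains `GPTBot` receives `403 Forbidden` before it reaches your application. The names `block_crawlers`, `BLOCKED_AGENT_TOKENS`, and `hello_app` are hypothetical. The check relies on the crawler identifying itself honestly, so treat it as one layer alongside robots.txt, not a guarantee.

```python
# Case-insensitive substrings to look for in the User-Agent header.
BLOCKED_AGENT_TOKENS = ("gptbot",)


def block_crawlers(app):
    """Wrap a WSGI app so requests from blocked user agents get a 403."""

    def middleware(environ, start_response):
        user_agent = environ.get("HTTP_USER_AGENT", "").lower()
        if any(token in user_agent for token in BLOCKED_AGENT_TOKENS):
            body = b"Forbidden\n"
            start_response("403 Forbidden", [
                ("Content-Type", "text/plain"),
                ("Content-Length", str(len(body))),
            ])
            return [body]
        # Everyone else reaches the wrapped application unchanged.
        return app(environ, start_response)

    return middleware


def hello_app(environ, start_response):
    """Stand-in for your real WSGI application."""
    body = b"Hello\n"
    start_response("200 OK", [
        ("Content-Type", "text/plain"),
        ("Content-Length", str(len(body))),
    ])
    return [body]


app = block_crawlers(hello_app)
```

To try it locally, pass `app` to `wsgiref.simple_server.make_server` from the standard library and send a request with a GPTBot user-agent string. To cover other crawlers, add their tokens to `BLOCKED_AGENT_TOKENS`.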

Vigilant Monitoring: Regularly review your server logs and traffic patterns. Look for unusual spikes and for requests whose user agent identifies GPTBot or other crawlers you have not approved.
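Server access logs are the most direct place to spot GPTBot, because it identifies itself in the `User-Agent` field. The sketch below assumes your server writes the widely used Combined Log Format (for example, Nginx's default `combined` format) and counts GPTBot requests per path. The function name and sample log lines are illustrative. A sudden rise in these counts, or GPTBot requests for paths you disallowed, is worth a closer look. Keep in mind that any client can claim to be GPTBot.

```python
import re
from collections import Counter

# Combined Log Format:
# ip ident user [time] "request line" status bytes "referer" "user-agent"
LOG_LINE = re.compile(
    r'^(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] "(?P<request>[^"]*)" '
    r'(?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"'
)


def gptbot_hits(lines):
    """Count requests per path whose user agent mentions GPTBot."""
    hits = Counter()
    for line in lines:
        match = LOG_LINE.match(line)
        if match is None or "gptbot" not in match["agent"].lower():
            continue  # skip malformed lines and other user agents
        parts = match["request"].split()  # e.g. ["GET", "/path", "HTTP/1.1"]
        path = parts[1] if len(parts) >= 2 else "-"
        hits[path] += 1
    return hits


GPTBOT_UA = ("Mozilla/5.0 AppleWebKit/537.36 (KHTML, like Gecko; "
             "compatible; GPTBot/1.0; +https://openai.com/gptbot)")

# Made-up sample lines (documentation IP addresses, not real traffic).
SAMPLE_LOG = [
    f'192.0.2.10 - - [10/Aug/2023:12:00:00 +0000] "GET /robots.txt HTTP/1.1" 200 64 "-" "{GPTBOT_UA}"',
    f'192.0.2.10 - - [10/Aug/2023:12:00:02 +0000] "GET /blog/post-1 HTTP/1.1" 200 5120 "-" "{GPTBOT_UA}"',
    f'192.0.2.11 - - [10/Aug/2023:12:00:04 +0000] "GET /blog/post-1 HTTP/1.1" 200 5120 "-" "{GPTBOT_UA}"',
    '198.51.100.7 - - [10/Aug/2023:12:00:05 +0000] "GET /about HTTP/1.1" 200 2048 '
    '"https://example.com/" "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"',
    "malformed line",
]

if __name__ == "__main__":
    print(gptbot_hits(SAMPLE_LOG).most_common())
```

For the sample lines, this prints `[('/blog/post-1', 2), ('/robots.txt', 1)]`. The browser request and the malformed line are ignored.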

Together, these measures let you limit GPTBot’s access to your website and keep control over your digital domain.

In Conclusion

Controlling how GPTBot interacts with your website is an important step in protecting your content and intellectual property. A well-configured robots.txt file lets you specify which sections of your site compliant crawlers may explore and which remain off-limits.