The distinction between MLOps and DevOps is one of the most important for engineering teams working with machine learning today. Many machine learning projects never make it from prototype to production; by some industry estimates, nearly half are never deployed. While DevOps practices have revolutionised traditional software development, machine learning introduces unique challenges that require a specialised approach.

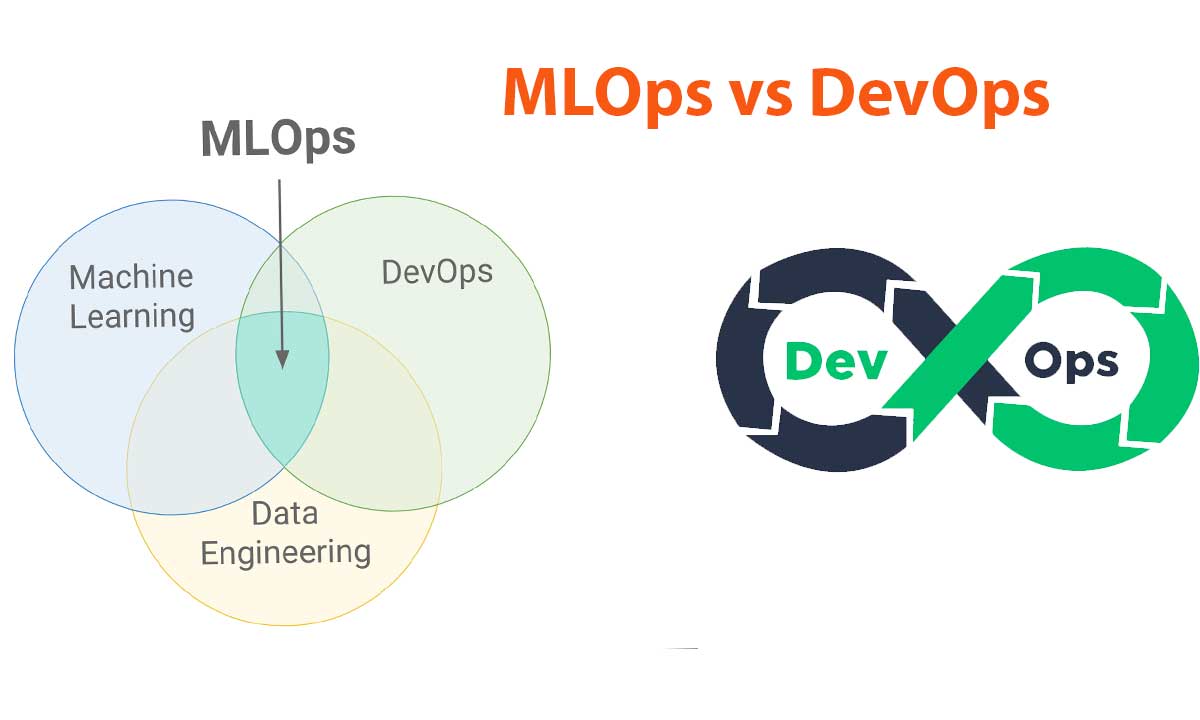

What is MLOps exactly? It’s essentially an extension of DevOps principles tailored to machine learning workflows. DevOps breaks down silos between development and operations teams; MLOps goes further by bringing data scientists into this collaborative framework. The difference becomes clear when you consider what each approach manages: traditional DevOps handles software code, whereas MLOps must also track data-driven artefacts, evolving models, and complex data dependencies. Whether you’re considering an MLOps course to upskill your team or simply trying to understand how these methodologies compare, these distinctions matter for your engineering success.

Throughout this guide, we’ll explore how these approaches differ in practical terms and why understanding their unique workflows can help you successfully implement machine learning in production environments.

Core Concepts: What Are MLOps and DevOps?

DevOps and MLOps represent two distinct yet interconnected approaches to streamlining development workflows. Understanding the fundamental differences between these concepts is crucial for engineering teams working with machine learning systems.

DevOps focuses primarily on automating and optimising the software development lifecycle. It brings together software development and IT operations teams to deliver software faster and more reliably, emphasising collaboration, automation, and continuous delivery without sacrificing quality. Key practices include continuous integration, continuous delivery, infrastructure as code, and automated testing, all designed to reduce time to market and improve product quality.

In contrast, MLOps (Machine Learning Operations) extends DevOps principles specifically for machine learning workflows. If you’re considering an MLOps course to enhance your team’s capabilities, you’ll discover that MLOps addresses the unique challenges that arise when developing and deploying ML models. Unlike traditional software, machine learning systems require special handling of data pipelines, model training, and monitoring for performance drift.

MLOps also encompasses several components that traditional DevOps either lacks or handles differently:

- Data engineering and preparation

- Model development and validation

- Deployment with version control for both code and data

- Continuous monitoring for model drift and performance

Fundamentally, you can think of MLOps as a specialised branch of DevOps tailored to machine learning projects. While both aim to place software in repeatable, fault-tolerant workflows, MLOps must also account for the experimental nature of model development.

Team composition also differs. Traditional DevOps involves software engineers and operations specialists, whereas MLOps teams typically include data scientists alongside MLOps engineers who handle deployment and monitoring. Many organisations investing in MLOps training find this collaboration essential for putting machine learning applications into production.

As machine learning becomes increasingly critical to business operations, understanding these distinctions helps engineering teams adopt practices suited to their specific needs.

Key Differences in Workflow and Lifecycle

The workflow differences between MLOps and DevOps reveal why traditional software practices often fall short for machine learning projects. Although both methodologies share foundational principles, their execution varies considerably due to the unique nature of ML systems.

Process Complexity

The MLOps process spans three broad phases (design, experimentation, and operations), in contrast to the more linear DevOps pipeline. Machine learning development is inherently experimental and research-oriented: data scientists may run many parallel experiments before deciding which model to promote to production, a pattern rarely seen in conventional software development.
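To make the experiment-then-promote pattern concrete, here is a minimal Python sketch. It assumes a toy one-feature regression problem with hypothetical training and validation data; each "experiment" fits a ridge-regularised slope with a different regularisation strength `lam`, and the run with the lowest validation error becomes the promotion candidate. Real experiments train far larger models, but the selection logic is much the same.

```python
from concurrent.futures import ThreadPoolExecutor

# Hypothetical toy data: (x, y) pairs where y is roughly 2 * x plus noise.
TRAIN = [(0.0, 0.1), (1.0, 2.1), (2.0, 3.9), (3.0, 6.2), (4.0, 7.8)]
VALID = [(5.0, 10.1), (6.0, 11.8)]


def fit_ridge_slope(data, lam):
    """Closed-form 1-D ridge regression through the origin: w = sum(x*y) / (sum(x^2) + lam)."""
    sxy = sum(x * y for x, y in data)
    sxx = sum(x * x for x, _ in data)
    return sxy / (sxx + lam)


def mse(w, data):
    """Mean squared error of the prediction y_hat = w * x."""
    return sum((y - w * x) ** 2 for x, y in data) / len(data)


def run_experiment(lam):
    """One experiment: train with regularisation strength lam, score on held-out data."""
    w = fit_ridge_slope(TRAIN, lam)
    return {"lam": lam, "weight": w, "val_mse": mse(w, VALID)}


def select_best(lams):
    """Run candidate experiments concurrently and return (best_run, all_runs)."""
    # Threads keep the sketch simple; real workloads usually fan out to
    # separate processes, GPUs, or machines.
    with ThreadPoolExecutor() as pool:
        runs = list(pool.map(run_experiment, lams))
    best = min(runs, key=lambda r: r["val_mse"])
    return best, runs


if __name__ == "__main__":
    best, runs = select_best([0.0, 0.1, 1.0, 10.0])
    for run in runs:
        print(run)
    print("promotion candidate:", best)
```

Keeping every run's parameters and scores, not just the winner, is what later makes the promotion decision auditable.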

Artefact Management

DevOps primarily manages relatively static artefacts such as source code and configuration files. MLOps, by contrast, must also track artefacts that change with every experiment, including:

- Data sets used for training

- Model building code

- Experiment configurations

- Hyperparameters

- Performance metrics

This expanded scope makes version control substantially more complex for ML systems, as each experimental run requires thorough documentation for future reproducibility and auditing.
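One way to capture what each run needs for reproducibility is a structured run record. The sketch below is illustrative only: `ExperimentRun` and its fields are hypothetical, not the schema of MLflow, Weights & Biases, or any other tool. It derives a deterministic key from the code version, data fingerprint, and configuration, so two runs with identical inputs share a key even if their recorded metrics differ.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, field


def fingerprint_bytes(data: bytes) -> str:
    """Content hash used to identify an exact dataset version."""
    return hashlib.sha256(data).hexdigest()


@dataclass
class ExperimentRun:
    """Hypothetical record of what is needed to reproduce or audit one training run."""

    code_version: str       # e.g. a Git commit SHA, supplied by the caller
    data_fingerprint: str   # content hash of the training data
    config: dict            # experiment configuration, including hyperparameters
    metrics: dict = field(default_factory=dict)  # results; excluded from the key

    def reproducibility_key(self) -> str:
        # Hash only the inputs (code, data, config), serialised with sorted keys
        # so that dict ordering does not change the result.
        payload = json.dumps(
            {"code": self.code_version, "data": self.data_fingerprint, "config": self.config},
            sort_keys=True,
        )
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()[:12]

    def to_json(self) -> str:
        return json.dumps({"key": self.reproducibility_key(), **asdict(self)}, sort_keys=True)


if __name__ == "__main__":
    run = ExperimentRun(
        code_version="abc1234",  # hypothetical commit SHA
        data_fingerprint=fingerprint_bytes(b"x,y\n1,2\n3,6\n"),
        config={"model": "ridge", "lam": 0.1, "seed": 42},
        metrics={"val_mse": 0.0218},  # placeholder value
    )
    print(run.to_json())
```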

Lifecycle Differences

The DevOps lifecycle focuses on build, test, deploy, and monitor stages, with application behaviour remaining relatively predictable once deployed. In contrast, MLOps introduces additional stages such as:

- Data preprocessing and feature engineering

- Model training and validation

- Continuous retraining

- Drift monitoring

Because ML models can degrade over time as real-world data patterns change, MLOps emphasises continuous monitoring for model drift, a concern with no direct equivalent in traditional software, whose logic does not silently degrade when its input data shifts.
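Drift can be quantified in several ways. One common choice for a single numeric feature is the population stability index (PSI), which compares the binned distribution seen in production with the one seen at training time: PSI is the sum over bins of (a_i - e_i) * ln(a_i / e_i), where e_i and a_i are the expected (training) and actual (production) shares in bin i. A frequently quoted rule of thumb treats values above about 0.25 as a significant shift, though thresholds vary by team. The bin edges and data below are hypothetical.

```python
import math
from bisect import bisect_right


def bin_shares(values, edges, eps=1e-6):
    """Share of values in each bin defined by sorted interior edges.

    k interior edges give k + 1 bins; a value equal to an edge falls into the higher bin.
    eps stands in for empty bins so the logarithm below stays finite.
    """
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[bisect_right(edges, v)] += 1
    return [max(c / len(values), eps) for c in counts]


def population_stability_index(expected, actual, edges):
    """PSI = sum over bins of (a_i - e_i) * ln(a_i / e_i)."""
    e = bin_shares(expected, edges)
    a = bin_shares(actual, edges)
    return sum((ai - ei) * math.log(ai / ei) for ai, ei in zip(a, e))


if __name__ == "__main__":
    edges = [0.25, 0.5, 0.75]                         # hypothetical bin edges
    training = [i / 100 for i in range(100)]          # roughly uniform on [0, 1)
    production = [0.5 + i / 200 for i in range(100)]  # shifted towards larger values
    print(round(population_stability_index(training, training, edges), 4))
    print(round(population_stability_index(training, production, edges), 4))
```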

Maturity Levels

MLOps maturity typically progresses through distinct levels: manual processes for teams just starting with ML, then ML pipeline automation (continuous training), and ultimately CI/CD pipeline automation for organisations that need frequent model updates. Each level automates more of the data, model, and code pipelines, increasing the speed at which models can be retrained and deployed.
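At the automated levels, both the decision to retrain and the decision to deploy are made by the pipeline rather than by a person. The following sketch expresses both checks as plain functions; the thresholds, the `MonitoringSnapshot` type, and the placeholder scores are hypothetical, and a real pipeline would launch its training job where the comment indicates.

```python
from dataclasses import dataclass

# Hypothetical thresholds; suitable values depend on the model and the business.
DRIFT_THRESHOLD = 0.25
MIN_LIVE_ACCURACY = 0.90


@dataclass
class MonitoringSnapshot:
    drift_score: float    # e.g. a population stability index for a key feature
    live_accuracy: float  # accuracy on recently labelled production data


def should_retrain(snapshot: MonitoringSnapshot) -> bool:
    """Continuous-training trigger: retrain when inputs drift or live quality drops."""
    return snapshot.drift_score > DRIFT_THRESHOLD or snapshot.live_accuracy < MIN_LIVE_ACCURACY


def should_promote(candidate_score: float, production_score: float, min_gain: float = 0.0) -> bool:
    """Deployment gate: promote only if the retrained model beats the current one
    by more than min_gain on the same evaluation set."""
    return candidate_score > production_score + min_gain


if __name__ == "__main__":
    snapshot = MonitoringSnapshot(drift_score=0.31, live_accuracy=0.93)
    if should_retrain(snapshot):
        # A real pipeline would launch its training job here; these scores are placeholders.
        candidate_score, production_score = 0.94, 0.93
        print("promote" if should_promote(candidate_score, production_score) else "keep current model")
    else:
        print("no retraining needed")
```

Comparing candidate and production models on the same evaluation set is the key design choice here: without it, an apparent improvement may simply reflect easier test data.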

Understanding these workflow distinctions helps engineering teams implement appropriate processes tailored to machine learning’s unique challenges rather than simply applying DevOps practices that weren’t designed for data-driven applications.

Tooling and Infrastructure for ML DevOps

The tooling landscape for ML DevOps reflects the unique challenges that machine learning projects present compared to traditional software development. Engineering teams must therefore select tools that address both software delivery and model lifecycle management.

Whereas traditional DevOps focuses primarily on code management and deployment, MLOps requires specialised tools for data versioning, experiment tracking, and model monitoring. As a result, many organisations investing in an MLOps course discover that they need to extend their toolchain beyond conventional DevOps solutions.

For experiment tracking and model development, MLflow and Weights & Biases have become widely adopted choices. These tools let data scientists record parameters, metrics, and artefacts in a centralised platform. Meanwhile, data versioning tools such as DVC (Data Version Control) track dataset changes with the same rigour that Git applies to code.
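The core idea behind data versioning tools like DVC is content addressing: a dataset is stored under a hash of its contents, and only a small pointer containing that hash is committed to Git. The sketch below illustrates the idea with the Python standard library; it is not DVC's implementation or file format (DVC uses its own cache layout and `.dvc` metafiles), and the function names are hypothetical.

```python
import hashlib
import shutil
import tempfile
from pathlib import Path


def _cache_path(cache_dir: Path, digest: str) -> Path:
    # Split the hash into a short prefix directory to avoid one huge folder.
    return cache_dir / digest[:2] / digest[2:]


def store_dataset(data_path: Path, cache_dir: Path) -> dict:
    """Copy a data file into a content-addressed cache; return a small pointer record.

    The pointer is what would be committed to Git; the data itself stays in the cache.
    Reads the whole file into memory, which is fine for a sketch but not for large data.
    """
    digest = hashlib.sha256(data_path.read_bytes()).hexdigest()
    target = _cache_path(cache_dir, digest)
    if not target.exists():  # identical content is stored only once
        target.parent.mkdir(parents=True, exist_ok=True)
        shutil.copy2(data_path, target)
    return {"path": data_path.name, "sha256": digest}


def restore_dataset(pointer: dict, cache_dir: Path, dest_dir: Path) -> Path:
    """Recreate the exact dataset version referenced by a pointer record."""
    dest_dir.mkdir(parents=True, exist_ok=True)
    dest = dest_dir / pointer["path"]
    shutil.copy2(_cache_path(cache_dir, pointer["sha256"]), dest)
    return dest


def demo():
    """Version a file twice, then restore the first version."""
    with tempfile.TemporaryDirectory() as tmp:
        root = Path(tmp)
        cache = root / "cache"
        data = root / "train.csv"
        data.write_text("x,y\n1,2\n")
        v1 = store_dataset(data, cache)
        data.write_text("x,y\n1,2\n3,6\n")  # the dataset changes
        v2 = store_dataset(data, cache)
        restored = restore_dataset(v1, cache, root / "checkout")
        return v1, v2, restored.read_text()


if __name__ == "__main__":
    v1, v2, text = demo()
    print(v1["sha256"][:12], v2["sha256"][:12])
    print(repr(text))
```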

Pipeline orchestration is another key difference between MLOps and DevOps tooling. While DevOps teams might use Jenkins or GitLab CI/CD for code pipelines, ML DevOps typically relies on workflow orchestrators such as Apache Airflow, Kubeflow, or Dagster to manage complex data and training workflows.
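Orchestrators such as Airflow, Kubeflow, and Dagster model a workflow as a directed acyclic graph (DAG) of tasks and run each task only after its dependencies finish. The sketch below reproduces just that ordering logic with Python's standard `graphlib` module (Python 3.9+); the task names and toy computations are hypothetical, and real orchestrators add scheduling, retries, logging, and distributed execution on top.

```python
from graphlib import TopologicalSorter  # Python 3.9+


def ingest(ctx):
    ctx["raw"] = [3.0, None, 4.0, 5.0]  # hypothetical raw feature values


def validate(ctx):
    # Drop missing values; a real pipeline might instead fail or raise an alert.
    ctx["clean"] = [v for v in ctx["raw"] if v is not None]


def profile(ctx):
    clean = ctx["clean"]
    ctx["stats"] = {"n": len(clean), "min": min(clean), "max": max(clean)}


def train(ctx):
    # Placeholder "model": predict the mean of the cleaned data.
    ctx["model"] = sum(ctx["clean"]) / len(ctx["clean"])


def evaluate(ctx):
    # Worst-case absolute error of the placeholder model on the data it saw.
    ctx["max_error"] = max(abs(v - ctx["model"]) for v in ctx["clean"])


# Each task maps to the set of tasks it depends on.
DAG = {
    "ingest": set(),
    "validate": {"ingest"},
    "profile": {"validate"},
    "train": {"validate"},
    "evaluate": {"train", "profile"},
}
TASKS = {"ingest": ingest, "validate": validate, "profile": profile,
         "train": train, "evaluate": evaluate}


def run_pipeline():
    """Execute tasks in an order that respects every dependency."""
    ctx = {}
    order = list(TopologicalSorter(DAG).static_order())
    for name in order:
        TASKS[name](ctx)
    return order, ctx


if __name__ == "__main__":
    order, ctx = run_pipeline()
    print(" -> ".join(order))
    print(ctx["model"], ctx["max_error"])
```

Because `profile` and `train` depend only on `validate`, an orchestrator is free to run them in parallel; this sketch simply executes them one after the other.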

For infrastructure management, both disciplines rely on containerisation (Docker), container orchestration (Kubernetes), and Infrastructure as Code (IaC) tools such as Terraform. MLOps, however, often requires additional provisioning for specialised hardware such as GPUs and for storing and processing large datasets.

End-to-end MLOps platforms have also emerged to streamline the entire workflow. Platforms such as Dataiku and Amazon SageMaker provide integrated environments for data preparation, model development, and deployment. MLOps training programmes often highlight these platforms because they simplify the adoption of MLOps practices.

For monitoring, DevOps teams use tools such as Prometheus and Grafana to track system performance; ML DevOps extends this to model drift and data quality metrics as well.
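Data quality monitoring often starts with simple per-batch statistics compared against what the model saw during training. In this sketch, the bounds and alert thresholds are hypothetical; in practice, such values would typically be exported to a monitoring stack (for example, as metrics scraped by Prometheus and charted in Grafana) rather than printed.

```python
import math
from statistics import mean


def data_quality_report(batch, expected_min, expected_max):
    """Simple quality metrics for one numeric feature in an incoming batch.

    expected_min/expected_max are hypothetical bounds taken from the training data.
    None and NaN both count as missing.
    """
    present = [v for v in batch if v is not None and not math.isnan(v)]
    out_of_range = [v for v in present if not expected_min <= v <= expected_max]
    return {
        "missing_rate": (len(batch) - len(present)) / len(batch) if batch else 0.0,
        "out_of_range_rate": len(out_of_range) / len(present) if present else 0.0,
        "mean": mean(present) if present else math.nan,
    }


def alerts(report, max_missing_rate=0.05, max_out_of_range_rate=0.01):
    """Names of metrics exceeding their (assumed) alert thresholds."""
    fired = []
    if report["missing_rate"] > max_missing_rate:
        fired.append("missing_rate")
    if report["out_of_range_rate"] > max_out_of_range_rate:
        fired.append("out_of_range_rate")
    return fired


if __name__ == "__main__":
    batch = [1.0, 2.0, None, 3.0, 250.0, float("nan"), 2.5, 1.5]
    report = data_quality_report(batch, expected_min=0.0, expected_max=100.0)
    print(report)
    print("alerts:", alerts(report))
```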

The choice of tooling ultimately depends on your team’s specific needs and existing technology stack. Regardless of which tools you select, the goal remains consistent: creating reproducible, scalable, and maintainable machine learning systems that deliver value in production environments.

Comparison Table

| Aspect | DevOps | MLOps |
| --- | --- | --- |
| Primary Focus | Software development lifecycle automation | Machine learning workflow optimisation with data-driven components |
| Team Composition | Software engineers and operations specialists | Data scientists, MLOps engineers, and operations specialists |
| Artefact Management | Source code and configuration files | Data sets, model code, experiment configurations, hyperparameters, and performance metrics |
| Workflow Pattern | Linear development approach | Experimental and research-oriented with parallel experiments |
| Lifecycle Stages | Build, test, deploy, monitor | Data preprocessing, feature engineering, model training, validation, deployment, continuous retraining, drift monitoring |
| Version Control Focus | Code management | Code, data, and model versioning |
| Monitoring Scope | System performance and application behaviour | System performance, model drift, and data quality metrics |
| Infrastructure Requirements | Standard computing resources | Specialised hardware (e.g., GPUs) and infrastructure for large datasets |
| Pipeline Tools | Jenkins, GitLab CI/CD | Apache Airflow, Kubeflow, Dagster |
| Core Objectives | Faster, reliable software delivery | Reproducible, scalable machine learning systems in production |

Conclusion

While MLOps and DevOps share roots, they serve distinct purposes. MLOps extends DevOps to tackle the complexities of machine learning, such as managing data, tracking experiments, and monitoring model performance. Unlike traditional software, ML systems require specialised workflows and tools.

If your organisation is scaling ML, an MLOps course can equip your team with the right skills. While DevOps offers a foundation, MLOps adds crucial layers like data handling and model lifecycle management.

Ultimately, adopting MLOps is key to successfully deploying ML models. Teams that embrace it tend to see fewer failed deployments and more consistent outcomes, turning prototypes into real business value.